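Overview:

In natural language processing, a word embedding (word vector) is a way of representing the words of a natural language: each word is represented as a point in an N-dimensional space, that is, as a vector in a high-dimensional space. This turns computation over natural language into computation over vectors.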

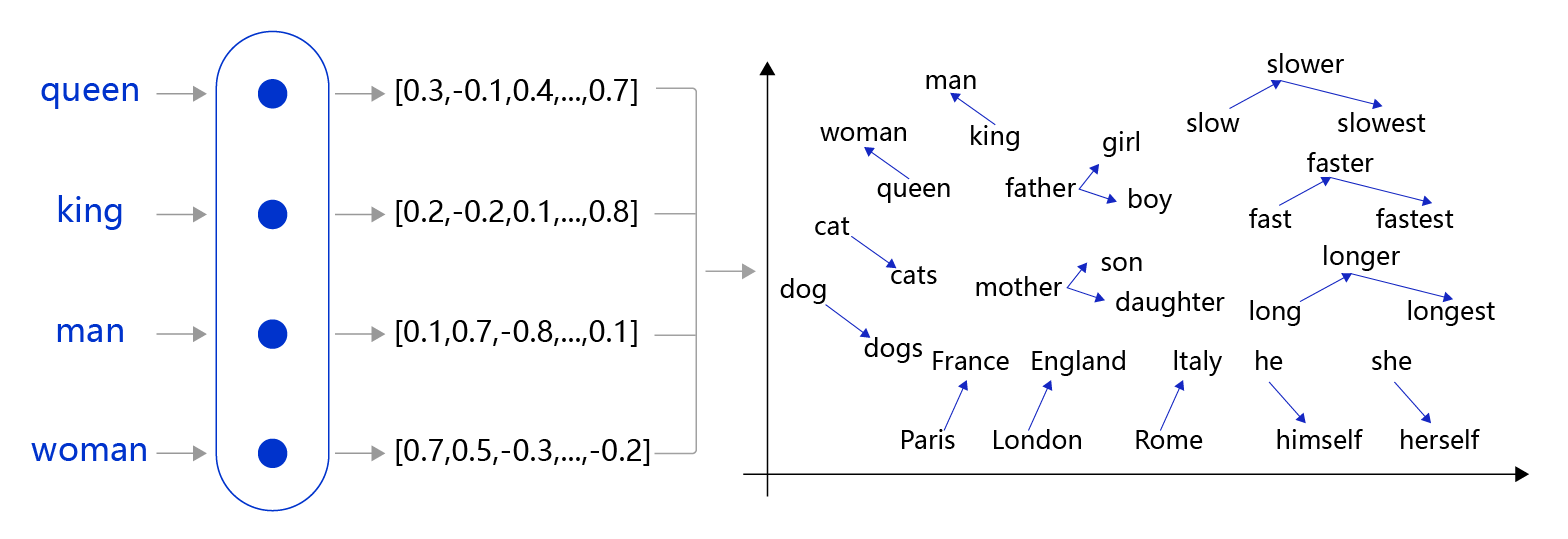

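In the word vector task shown in Figure 1, each word (such as queen or king) is first converted into a vector in a high-dimensional space; to some extent, these vectors capture the semantic information of the words. Computing the distances between these vectors then yields the relatedness between words, so that a computer can operate on natural language much as it operates on numbers.

Figure 1: Word vector computation

Most word vector models therefore have to answer two questions:

- How do we convert a word into a vector?

Words in natural language are discrete signals; for example, "banana", "orange", and "fruit" are simply three discrete words to us.

How do we convert each discrete word into a vector?

- How do we give the vectors semantic meaning?

For example, we know that in most cases "banana" and "orange" are quite similar, whereas "banana" and "sentence" are not; meanwhile, the similarity between "banana" and "food" or "fruit" probably lies somewhere between those two cases.

So how do we make word vectors carry this kind of semantic information?

How to convert words into vectors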

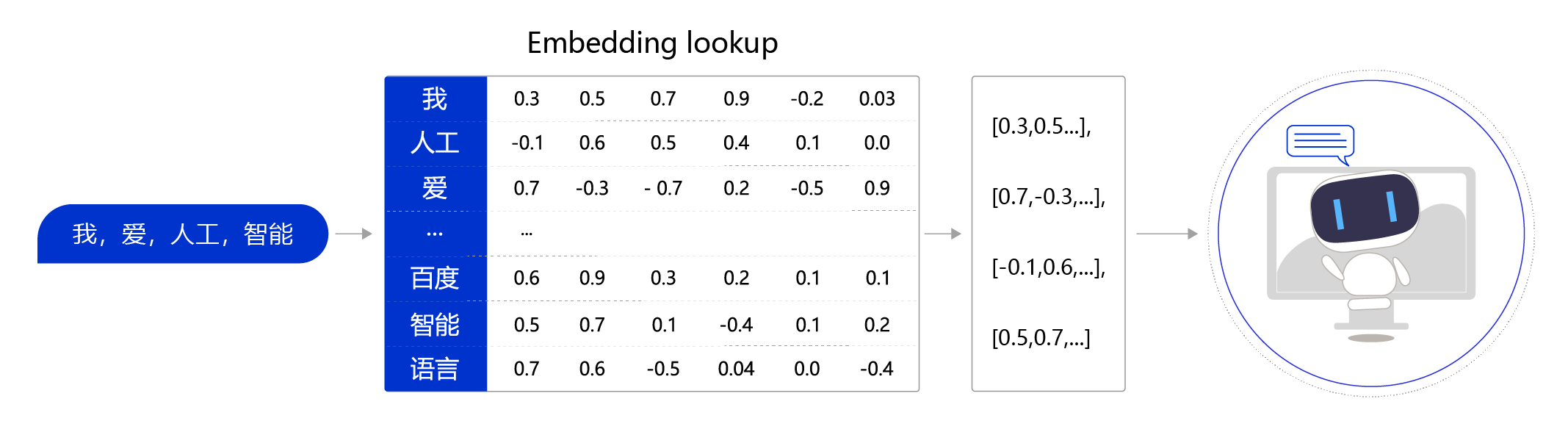

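Words in natural language are discrete signals, such as "我" (I), "爱" (love), and "人工智能" (artificial intelligence). How do we convert each discrete word into a vector? A common approach is to maintain a lookup table like the one in Figure 2. Each row of the table stores the vector of one particular word, and the first element of each row is the word itself, which makes it easy to map between words and vectors (for example, the vector for "我" is [0.3, 0.5, 0.7, 0.9, -0.2, 0.03]). Given any word or group of words, we can look them up in this table to convert them into vectors. This lookup-and-replace process is called Embedding Lookup.

Figure 2: Word vector lookup table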

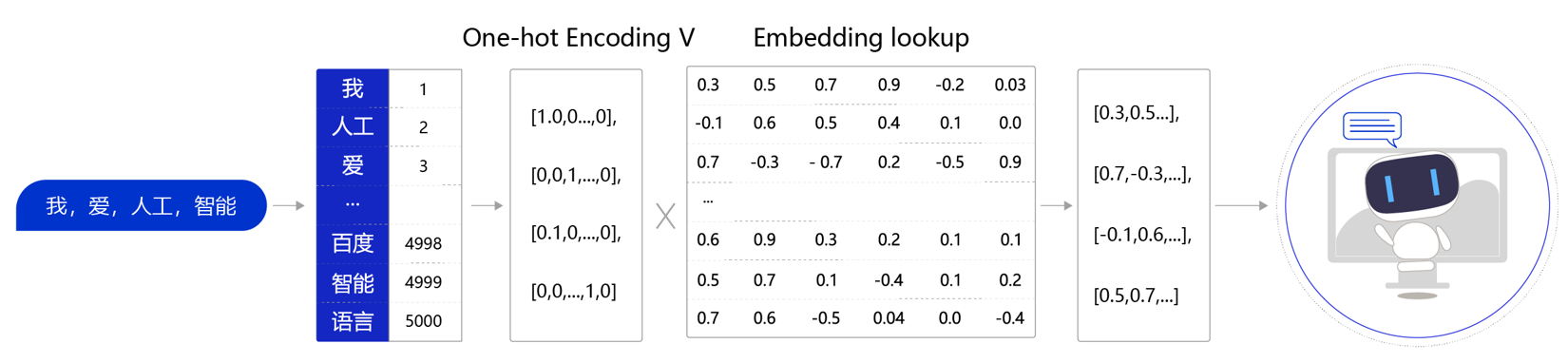

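The same process can also be implemented with a dictionary data structure. In fact, if computational efficiency is not a concern, a dictionary is a good choice. Neural network computation, however, demands a great deal of computing power and often relies on dedicated hardware (such as GPUs) to reach an acceptable training speed. Computation on a GPU operates on tensors, so in practice the Embedding Lookup has to be expressed as a tensor computation, as shown in Figure 3.

Figure 3: Tensor computation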
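The dictionary-based lookup described above can be sketched as follows. This is a minimal illustration, not the tutorial's implementation: only the vector for "我" comes from the text, while the other two vectors and the helper name `embedding_lookup` are made up for the example.

```python
# Toy lookup table: word -> vector.
# Only the vector for "我" is taken from the text; the others are made-up values.
lookup_table = {
    "我": [0.3, 0.5, 0.7, 0.9, -0.2, 0.03],
    "爱": [0.1, 0.2, 0.3, 0.4, 0.5, 0.6],
    "人工智能": [-0.4, 0.8, 0.0, 0.2, 0.1, -0.7],
}


def embedding_lookup(words, table):
    """Replace each word with its vector (hypothetical helper)."""
    return [table[word] for word in words]


vectors = embedding_lookup(["我", "爱", "人工智能"], lookup_table)
print(len(vectors), len(vectors[0]))
```

A dictionary like this is easy to read, but it processes one word at a time in Python, which is why the tensor formulation is preferred on a GPU.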

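For the sentence "我, 爱, 人工, 智能" (I, love, artificial, intelligence), Embedding Lookup is converted into a tensor computation as follows:

- First, look up each word of the sentence in a dictionary to convert it into an ID (usually an integer greater than or equal to 0). The word-to-ID mapping can be defined as needed (in Figure 3: 我 => 1, 人工 => 2, 爱 => 3, …).

- Next, convert each ID into a fixed-length vector. Suppose the vocabulary contains 5000 words; the word "我" can then be represented by a 5000-dimensional vector. Because the ID of "我" is 1, the first element of this vector is 1 and all the others are 0 ([1, 0, 0, …, 0]; positions are counted from 1 here). Likewise, for "人工" the second element is 1 and all the others are 0. In this way a single vector represents a single word. Since each word's vector has exactly one element equal to 1 and all others equal to 0, this process is called One-Hot Encoding.

- After One-Hot Encoding, the sentence "我, 爱, 人工, 智能" becomes a tensor of shape 4×5000, denoted $V$. This tensor has 4 rows and 5000 columns; from top to bottom, the rows are the One-Hot Encodings of "我", "爱", "人工", and "智能". Finally, we multiply $V$ by a dense tensor $W$ of shape 5000×128 (5000 is the vocabulary size and 128 is the dimension of each word vector). The product is a 4×128 tensor, which completes the conversion of words into vectors.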
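The steps above can be sketched in NumPy to show that multiplying a one-hot tensor by a dense table is the same as selecting rows of the table. This is an illustrative sketch under a few assumptions: the table values are random stand-ins, the ID 4 for "智能" is hypothetical (the text elides it), and rows are indexed from 0, so ID i selects row i.

```python
import numpy as np

vocab_size, embedding_size = 5000, 128
rng = np.random.default_rng(0)

# Dense embedding table W: one row per word ID (random stand-in values).
W = rng.standard_normal((vocab_size, embedding_size))

# IDs for "我", "爱", "人工", "智能"; the last ID (4) is an assumption.
ids = np.array([1, 3, 2, 4])

# One-hot encode: V has shape [4, 5000] with a single 1 per row.
V = np.zeros((len(ids), vocab_size))
V[np.arange(len(ids)), ids] = 1.0

# Multiplying by W selects one row of W per word: shape [4, 128].
H = V @ W
print(H.shape)
print(np.allclose(H, W[ids]))
```

Because the product only ever picks out rows, practical implementations skip the one-hot step and index the table by ID directly.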

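How to give vectors semantic meaning

Once every word has a vector representation, the next question arises. In most cases "banana" and "orange" are quite similar, whereas "banana" and "sentence" are not; meanwhile, the similarity between "banana" and "food" or "fruit" probably lies somewhere between those two cases. So how can the stored word vectors carry this kind of semantic information?

Let us first look at a useful idea from natural language processing. Researchers in the field generally agree that the meaning of a word can be understood from its context. For example:

"The Apple phone is well made, but a little expensive."

"This apple is delicious and very crisp."

"The Pineapple is decent too, but it supports fewer apps than the Apple."

From the context we can infer that the first "apple" refers to an Apple phone, the second refers to the fruit, and the "pineapple" in the third sentence most likely refers to a phone as well. Describing the meaning of a word or element through its context is a common and effective practice in natural language processing. We can train word vectors in the same way, so that they are able to represent semantic information.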

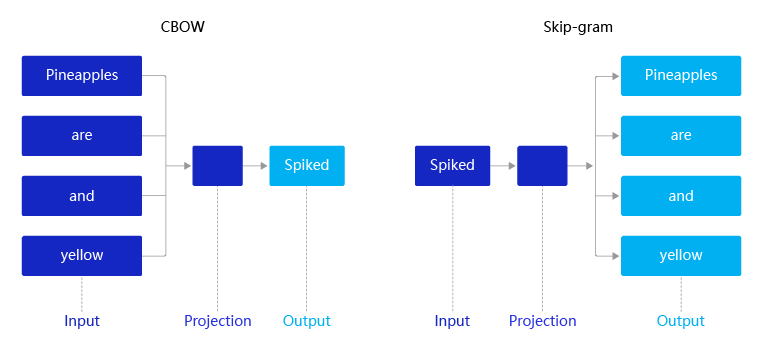

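In 2013, Mikolov et al. proposed the classic word2vec algorithm, which learns semantic information from context. word2vec includes two classic models, CBOW (Continuous Bag-of-Words) and Skip-gram, shown in Figure 4.

- CBOW: infers the center word from the word vectors of its context.

- Skip-gram: infers the context from the center word.

Figure 4: Semantic learning with CBOW and Skip-gram

Given the sentence "Pineapples are spiked and yellow", the two models work as follows:

- In CBOW, we first choose a center word in the sentence and treat the other words as its context. In the CBOW panel of Figure 4, "Spiked" is the center word and "Pineapples, are, and, yellow" are its context. During learning, the word vectors of the context are used to infer the center word, so the meaning of the center word is propagated into the context word vectors (for example, "Spiked → pineapple"), and semantic information is learned.

- In Skip-gram, we likewise choose a center word and treat the other words as its context. In the Skip-gram panel of Figure 4, "Spiked" is the center word and "Pineapples, are, and, yellow" are its context. The difference is that, during learning, the word vector of the center word is used to infer the context, so the meaning defined by the context is propagated into the representation of the center word (for example, "pineapple → Spiked"), and semantic information is learned.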
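To make the difference concrete, the sketch below builds the training examples each model would see for this sentence. It is only an illustration: the tuple layout and variable names are assumptions, and the whole sentence is used as the context window.

```python
sentence = "Pineapples are spiked and yellow".lower().split()

center_idx = sentence.index("spiked")
center = sentence[center_idx]
context = [w for i, w in enumerate(sentence) if i != center_idx]

# CBOW: all context words together predict the center word (one example).
cbow_example = (context, center)

# Skip-gram: the center word predicts each context word (one example per word).
skipgram_examples = [(center, w) for w in context]

print(cbow_example)
print(skipgram_examples)
```

One window yields a single CBOW example but four Skip-gram examples, one per context word.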

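Note:

In general, CBOW trains faster and more stably than Skip-gram, because CBOW averages the context vectors, so each training step sees more samples. For rare words (words with low frequency), Skip-gram works better than CBOW, because Skip-gram does not sidestep rare words (in CBOW, when a rare word appears in the input, its contribution is diluted by the weights of the other, more common words).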

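An idealized implementation of Skip-gram

When Skip-gram is implemented as a neural network, the model takes two different tensors as input:

- A tensor for the center words, which we call center_words $V$. This is typically a one-hot tensor of shape [batch_size, vocab_size] that encodes the ID of each center word in a mini-batch: the position matching the ID is 1 and all other positions are 0.

- A tensor for the target words, that is, the context words to be inferred, which we call target_words $T$. This is typically an integer tensor of shape [batch_size, 1] whose elements lie in [0, vocab_size-1], each being the ID of a target word.

In the ideal case, Skip-gram can be implemented in a simple way: treat every target word to be inferred as a label and build the network as a large-scale classification task, as follows:

- Declare a tensor of shape [vocab_size, embedding_size] as the word vectors to be learned, denoted $W_0$. For a given input $V$, multiply $V$ by $W_0$ (a matrix multiplication) to obtain a tensor of shape [batch_size, embedding_size], denoted $H = V \times W_0$. The tensor $H$ can be regarded as the result of the word vector lookup.

- Declare another learnable parameter $W_1$ of shape [embedding_size, vocab_size]. Multiply $H$ by $W_1$ to obtain a new tensor $O = H \times W_1$ of shape [batch_size, vocab_size], which holds the prediction scores over all target words for each center word in the mini-batch.

- Apply the softmax function to normalize the predictions of each center word in the mini-batch into probabilities, which completes the network.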
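The three steps above can be sketched in NumPy on a toy vocabulary. This is a forward pass only, under stated assumptions: the sizes, IDs, and random initial values are made up, and the cross-entropy loss at the end is the usual choice for a softmax classifier rather than something the text specifies.

```python
import numpy as np

batch_size, vocab_size, embedding_size = 4, 10, 3
rng = np.random.default_rng(0)

W0 = rng.standard_normal((vocab_size, embedding_size)) * 0.1  # word vectors
W1 = rng.standard_normal((embedding_size, vocab_size)) * 0.1  # output projection

center_ids = np.array([1, 3, 2, 4])  # made-up center word IDs
target_ids = np.array([3, 2, 4, 1])  # made-up target word IDs

V = np.eye(vocab_size)[center_ids]   # one-hot, [batch_size, vocab_size]
H = V @ W0                           # [batch_size, embedding_size]
O = H @ W1                           # [batch_size, vocab_size] scores

# Row-wise softmax (shifted by the row maximum for numerical stability).
exp = np.exp(O - O.max(axis=1, keepdims=True))
probs = exp / exp.sum(axis=1, keepdims=True)

# Cross-entropy of the true target words (assumed loss).
loss = -np.log(probs[np.arange(batch_size), target_ids]).mean()
print(probs.shape, float(loss))
```

Note that every row of `probs` requires a score for each of the `vocab_size` words, which is the cost that becomes prohibitive for real vocabularies.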

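A practical implementation of Skip-gram

In practice, however, vocab_size is usually very large (hundreds of thousands or even millions), so $W_0$ and $W_1$ are very large too. For $W_0$, the computation is not actually carried out as a matrix multiplication; instead, the rows of $W_0$ are fetched directly by ID. For $W_1$, however, a very large matrix operation remains (it is slow and consumes a great deal of memory or GPU memory). To alleviate this, negative sampling is commonly used to approximate the multi-class classification task. In that setting, the newly defined $W_0$ and $W_1$ are both tensors of shape [vocab_size, embedding_size].

Suppose there is a center word $c$ and a positive context word $t_p$. In the idealized implementation of Skip-gram, we maximize the probability of inferring $t_p$ from $c$. Learning with softmax means maximizing the predicted probability of $t_p$ while minimizing the predicted probabilities of all other words in the vocabulary. This is slow because every word in the vocabulary has to be evaluated. An alternative is to pick a few representative words from the vocabulary at random and minimize their probabilities, as an approximation to minimizing the overall predicted probability. For example, first fix a center word (such as "人工", artificial) and a positive target word (such as "智能", intelligence), then randomly sample a few negative target words from the vocabulary (such as "日本", Japan, or "喝茶", drinking tea). With these in hand, the Skip-gram model becomes a binary classification task: for the positive target word we maximize its predicted probability, and for the negative target words we minimize theirs. This speeds up the computation. The approach is called negative sampling.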

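In the implementation, the model usually takes three tensors as input:

- A tensor for the center words, which we call center_words $V$. This is typically a one-hot tensor of shape [batch_size, vocab_size] that encodes the ID of each center word in a mini-batch.

- A tensor for the target words, which we call target_words $T$. This is likewise a one-hot tensor of shape [batch_size, vocab_size] that encodes the ID of each target word in a mini-batch.

- A tensor for the target word labels, which we call labels $L$. This is typically a tensor of shape [batch_size, 1] in which every element is either 0 or 1 (0: negative sample, 1: positive sample).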

Training proceeds as follows:

- Use $V$ to look up $W_0$ and $T$ to look up $W_1$, obtaining two tensors of shape [batch_size, embedding_size], denoted $H_0$ and $H_1$.

- Take the row-wise dot product of these two tensors to obtain a tensor of shape [batch_size]: $O = [O_i = \sum_j H_0[i,j] \times H_1[i,j]]_{i=1}^{batch\_size}$.

- Apply the sigmoid function to $O$ to map each dot product to a probability between 0 and 1, use it as the predicted probability, and train the model against the labels $L$.
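The training steps above can be sketched in NumPy for a single tiny batch. This is a forward pass only, with made-up sizes, IDs, and random initial values; the binary cross-entropy at the end is the standard loss for a sigmoid output and is included here as an assumption about how the labels are used.

```python
import numpy as np

vocab_size, embedding_size = 10, 3
rng = np.random.default_rng(0)

W0 = rng.standard_normal((vocab_size, embedding_size)) * 0.1  # center-word table
W1 = rng.standard_normal((vocab_size, embedding_size)) * 0.1  # target-word table

# One positive pair followed by two negative pairs for the same center word.
center_ids = np.array([2, 2, 2])
target_ids = np.array([5, 7, 9])
labels = np.array([1.0, 0.0, 0.0])

H0 = W0[center_ids]              # lookup by ID, [batch_size, embedding_size]
H1 = W1[target_ids]              # lookup by ID, [batch_size, embedding_size]
O = (H0 * H1).sum(axis=1)        # row-wise dot product, [batch_size]
pred = 1.0 / (1.0 + np.exp(-O))  # sigmoid, values in (0, 1)

# Binary cross-entropy against the 0/1 labels (assumed loss).
loss = -(labels * np.log(pred) + (1 - labels) * np.log(1 - pred)).mean()
print(O.shape, float(loss))
```

Each sample touches only two rows of the tables, so the cost per sample no longer depends on the vocabulary size.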

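After training, $W_0$ is generally used as the final word vectors: each word is represented by its row in $W_0$. The similarity between words can then be computed from the dot product of their vectors (the code below normalizes this dot product into a cosine similarity).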

    # encoding=utf8
    import io
    import os
    import sys
    import requests
    from collections import OrderedDict
    import math
    import random
    import numpy as np
    import paddle
    from paddle.nn import Embedding
    import paddle.nn.functional as F
    import paddle.nn as nn

    # Download the corpus used to train word2vec
    def download():
        # Some open datasets can be downloaded from Baidu cloud servers (dataset.bj.bcebos.com)
        corpus_url = "https://dataset.bj.bcebos.com/word2vec/text8.txt"
        # Use Python's requests package to download the dataset
        web_request = requests.get(corpus_url)
        corpus = web_request.content
        # Save the downloaded file as text8.txt in the current directory
        with open("./text8.txt", "wb") as f:
            f.write(corpus)

    download()

Next, read the downloaded corpus into the program and print the first 500 characters to see its format:

    # Read the text8 data
    def load_text8():
        with open("./text8.txt", "r") as f:
            corpus = f.read().strip("\n")
        return corpus

    corpus = load_text8()

    # Print the first 500 characters to get a feel for the corpus
    print(corpus[:500])

Output:

    anarchism originated as a term of abuse first used against early working class radicals
    including the diggers of the english revolution and the sans culottes of the french
    revolution whilst the term is still used in a pejorative way to describe any act that
    used violent means to destroy the organization of society it has also been taken up as
    a positive label by self defined anarchists the word anarchism is derived from the greek
    without archons ruler chief king anarchism as a political philoso

In natural language processing, the corpus usually has to be tokenized first. For English, simply splitting on spaces is enough:

    # Preprocess the corpus (tokenization)
    def data_preprocess(corpus):
        # English words are often capitalized at the start of a sentence, so convert
        # everything to lowercase to normalize the corpus (Apple vs. apple, etc.)
        corpus = corpus.strip().lower()
        corpus = corpus.split(" ")
        return corpus

    corpus = data_preprocess(corpus)
    print(corpus[:50])

Output:

    ['anarchism', 'originated', 'as', 'a', 'term', 'of', 'abuse', 'first', 'used', 'against',
    'early', 'working', 'class', 'radicals', 'including', 'the', 'diggers', 'of', 'the',
    'english', 'revolution', 'and', 'the', 'sans', 'culottes', 'of', 'the', 'french',
    'revolution', 'whilst', 'the', 'term', 'is', 'still', 'used', 'in', 'a', 'pejorative',
    'way', 'to', 'describe', 'any', 'act', 'that', 'used', 'violent', 'means', 'to', 'destroy', 'the']

After tokenization, count the words in the corpus and assign an ID to each one. A common choice is to assign IDs by frequency: the more frequent the word, the smaller its ID, which makes the vocabulary easier to manage:

    # Build the vocabulary: count each word's frequency and map each word to an integer id
    def build_dict(corpus):
        # First count how often each distinct word occurs, using a dict
        word_freq_dict = dict()
        for word in corpus:
            if word not in word_freq_dict:
                word_freq_dict[word] = 0
            word_freq_dict[word] += 1

        # Sort the words by count, most frequent first.
        # High-frequency words tend to be function words such as "I", "the", "you",
        # while low-frequency words are often nouns such as "nlp"
        word_freq_dict = sorted(word_freq_dict.items(), key=lambda x: x[1], reverse=True)

        # Build three dicts:
        #   word2id_dict: word -> id
        #   word2id_freq: id -> frequency
        #   id2word_dict: id -> word
        word2id_dict = dict()
        word2id_freq = dict()
        id2word_dict = dict()

        # Walk through the words from most to least frequent and give each a unique id
        for word, freq in word_freq_dict:
            curr_id = len(word2id_dict)
            word2id_dict[word] = curr_id
            word2id_freq[word2id_dict[word]] = freq
            id2word_dict[curr_id] = word

        return word2id_freq, word2id_dict, id2word_dict

    word2id_freq, word2id_dict, id2word_dict = build_dict(corpus)
    vocab_size = len(word2id_freq)
    print("there are totoally %d different words in the corpus" % vocab_size)
    for _, (word, word_id) in zip(range(50), word2id_dict.items()):
        print("word %s, its id %d, its word freq %d" % (word, word_id, word2id_freq[word_id]))

Output:

    there are totoally 253854 different words in the corpus
    word the, its id 0, its word freq 1061396
    word of, its id 1, its word freq 593677
    word and, its id 2, its word freq 416629
    word one, its id 3, its word freq 411764
    word in, its id 4, its word freq 372201
    word a, its id 5, its word freq 325873
    word to, its id 6, its word freq 316376
    word zero, its id 7, its word freq 264975
    word nine, its id 8, its word freq 250430
    word two, its id 9, its word freq 192644
    word is, its id 10, its word freq 183153
    word as, its id 11, its word freq 131815
    word eight, its id 12, its word freq 125285
    word for, its id 13, its word freq 118445
    word s, its id 14, its word freq 116710
    word five, its id 15, its word freq 115789
    word three, its id 16, its word freq 114775
    word was, its id 17, its word freq 112807
    word by, its id 18, its word freq 111831
    word that, its id 19, its word freq 109510
    word four, its id 20, its word freq 108182
    word six, its id 21, its word freq 102145
    word seven, its id 22, its word freq 99683
    word with, its id 23, its word freq 95603
    word on, its id 24, its word freq 91250
    word are, its id 25, its word freq 76527
    word it, its id 26, its word freq 73334
    word from, its id 27, its word freq 72871
    word or, its id 28, its word freq 68945
    word his, its id 29, its word freq 62603
    word an, its id 30, its word freq 61925
    word be, its id 31, its word freq 61281
    word this, its id 32, its word freq 58832
    word which, its id 33, its word freq 54788
    word at, its id 34, its word freq 54576
    word he, its id 35, its word freq 53573
    word also, its id 36, its word freq 44358
    word not, its id 37, its word freq 44033
    word have, its id 38, its word freq 39712
    word were, its id 39, its word freq 39086
    word has, its id 40, its word freq 37866
    word but, its id 41, its word freq 35358
    word other, its id 42, its word freq 32433
    word their, its id 43, its word freq 31523
    word its, its id 44, its word freq 29567
    word first, its id 45, its word freq 28810
    word they, its id 46, its word freq 28553
    word some, its id 47, its word freq 28161
    word had, its id 48, its word freq 28100
    word all, its id 49, its word freq 26229

With the word2id dictionary in hand, the original corpus needs one more processing step: replace every word with its ID so that the neural network can process it:

    # Convert the corpus into a sequence of ids
    def convert_corpus_to_id(corpus, word2id_dict):
        # Replace every word in the corpus with its id
        corpus = [word2id_dict[word] for word in corpus]
        return corpus

    corpus = convert_corpus_to_id(corpus, word2id_dict)
    print("%d tokens in the corpus" % len(corpus))
    print(corpus[:50])

Output:

    17005207 tokens in the corpus
    [5233, 3080, 11, 5, 194, 1, 3133, 45, 58, 155, 127, 741, 476, 10571, 133, 0, 27349, 1, 0,
    102, 854, 2, 0, 15067, 58112, 1, 0, 150, 854, 3580, 0, 194, 10, 190, 58, 4, 5, 10712, 214,
    6, 1324, 104, 454, 19, 58, 2731, 362, 6, 3672, 0]

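Next, subsample the original text. The main idea of subsampling is to reduce how often high-frequency words appear in the corpus: occurrences of words are randomly discarded, and the more frequent a word is, the more likely each occurrence is to be discarded. High-frequency words such as punctuation or articles are therefore dropped most of the time, which improves the word vectors learned for the vocabulary as a whole:

    # Process the corpus with subsampling to improve training
    def subsampling(corpus, word2id_freq):
        # discard() decides whether one occurrence of a word is dropped.
        # It is random, so each call may return a different result.
        # The more frequent a word is, the more likely it is to be dropped.
        def discard(word_id):
            return random.uniform(0, 1) < 1 - math.sqrt(
                1e-4 / word2id_freq[word_id] * len(corpus))

        corpus = [word for word in corpus if not discard(word)]
        return corpus

    corpus = subsampling(corpus, word2id_freq)
    print("%d tokens in the corpus" % len(corpus))
    print(corpus[:50])

Output:

    8745548 tokens in the corpus
    [5233, 3080, 3133, 58, 741, 476, 10571, 27349, 102, 854, 15067, 58112, 854, 3580, 10712, 1324,
    104, 2731, 6, 3672, 708, 371, 539, 1423, 2757, 567, 686, 7088, 5233, 1052, 320, 44611, 2877,
    792, 186, 5233, 200, 602, 1134, 2621, 8983, 279, 4147, 6437, 4186, 153, 362, 5233, 1137, 447]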
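To see what the discard rule does to individual words, the sketch below evaluates the same expression, 1 - sqrt(t / f) with t = 1e-4 and f the relative frequency of the word, for a few counts. The counts for "the" and "all" and the total token count are the ones printed earlier; the third entry is a made-up rare word, and the helper name `discard_prob` and the clamp at 0 are additions for illustration.

```python
import math


def discard_prob(count, total_tokens, t=1e-4):
    """Probability of dropping one occurrence of a word (clamped at 0)."""
    freq = count / total_tokens
    return max(0.0, 1.0 - math.sqrt(t / freq))


total = 17005207  # token count of the corpus before subsampling
for word, count in [("the", 1061396), ("all", 26229), ("rare word (made-up)", 1000)]:
    print(word, round(discard_prob(count, total), 3))
```

Under this rule an occurrence of "the" is dropped about 96% of the time, while any word whose relative frequency is below t is never dropped.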

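Once the corpus has been preprocessed, the training data can be built. As described above, a sliding window scans the corpus from left to right; within each window, the center word has to predict its context, and this forms the training data.

In practice the vocabulary is often large (50,000 or 100,000 words, for example), and matrix operations over a large vocabulary (such as softmax) are very expensive, so negative sampling is used to approximate the softmax result:

- Given a center word and a context word to be predicted, treat the context word as a positive sample.

- Pick several negative samples by sampling randomly from the vocabulary.

- Turn the large-scale classification problem into a binary classification problem, which speeds up the computation.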

    # Build the data for model training
    # max_window_size is the maximum window size; the program scans the whole corpus
    # from left to right with windows of at most this size
    # negative_sample_num is the number of negative samples drawn for each positive sample.
    # In general, the larger negative_sample_num is, the more stable training is,
    # but the slower it runs.
    def build_data(corpus, word2id_dict, word2id_freq, max_window_size=3, negative_sample_num=4):
        # Store the processed data in a list
        dataset = []

        # Enumerate every center position from left to right
        for center_word_idx in range(len(corpus)):
            # Randomly sample a window_size of at most max_window_size,
            # which makes training more stable
            window_size = random.randint(1, max_window_size)
            # The current center word is the word at center_word_idx
            center_word = corpus[center_word_idx]

            # Words within window_size on either side of the center word are positive samples
            positive_word_range = (max(0, center_word_idx - window_size),
                                   min(len(corpus) - 1, center_word_idx + window_size))
            positive_word_candidates = [
                corpus[idx]
                for idx in range(positive_word_range[0], positive_word_range[1] + 1)
                if idx != center_word_idx]

            # For each positive sample, randomly draw negative_sample_num negative samples
            for positive_word in positive_word_candidates:
                # Add the (center word, positive sample, label=1) triple to dataset;
                # label=1 marks a positive sample
                dataset.append((center_word, positive_word, 1))

                # Negative sampling (vocab_size is the global defined above)
                i = 0
                while i < negative_sample_num:
                    negative_word_candidate = random.randint(0, vocab_size - 1)
                    if negative_word_candidate not in positive_word_candidates:
                        # Add the (center word, negative sample, label=0) triple to dataset;
                        # label=0 marks a negative sample
                        dataset.append((center_word, negative_word_candidate, 0))
                        i += 1

        return dataset

    corpus_light = corpus[:int(len(corpus) * 0.2)]
    dataset = build_data(corpus_light, word2id_dict, word2id_freq)
    for _, (center_word, target_word, label) in zip(range(50), dataset):
        print("center_word %s, target %s, label %d" % (id2word_dict[center_word],
                                                       id2word_dict[target_word], label))

Output:

    center_word anarchism, target originated, label 1
    center_word anarchism, target kdepim, label 0
    center_word anarchism, target mahoney, label 0
    center_word anarchism, target epitomises, label 0
    center_word anarchism, target girotti, label 0
    center_word anarchism, target abuse, label 1
    center_word anarchism, target spud, label 0
    center_word anarchism, target productivity, label 0
    center_word anarchism, target minyans, label 0
    center_word anarchism, target baluchi, label 0
    center_word anarchism, target used, label 1
    center_word anarchism, target enshrinement, label 0
    center_word anarchism, target ivtran, label 0
    center_word anarchism, target ladna, label 0
    center_word anarchism, target urau, label 0
    center_word originated, target anarchism, label 1
    center_word originated, target giba, label 0
    center_word originated, target intermixable, label 0
    center_word originated, target domacho, label 0
    center_word originated, target ricercar, label 0
    center_word originated, target abuse, label 1
    center_word originated, target einn, label 0
    center_word originated, target meganyctiphanes, label 0
    center_word originated, target heliers, label 0
    center_word originated, target plou, label 0
    center_word originated, target used, label 1
    center_word originated, target cerion, label 0
    center_word originated, target lehnsherr, label 0
    center_word originated, target greetland, label 0
    center_word originated, target zell, label 0
    center_word originated, target working, label 1
    center_word originated, target osn, label 0
    center_word originated, target greenburg, label 0
    center_word originated, target verrine, label 0
    center_word originated, target parrallel, label 0
    center_word abuse, target anarchism, label 1
    center_word abuse, target hala, label 0
    center_word abuse, target prototypically, label 0
    center_word abuse, target hreini, label 0
    center_word abuse, target villeggiatura, label 0
    center_word abuse, target originated, label 1
    center_word abuse, target chaomh, label 0
    center_word abuse, target nimzowitch, label 0
    center_word abuse, target micropatrology, label 0
    center_word abuse, target alterum, label 0
    center_word abuse, target used, label 1
    center_word abuse, target quackmore, label 0
    center_word abuse, target pristina, label 0
    center_word abuse, target narrenturm, label 0
    center_word abuse, target femi, label 0

With the training data ready, assemble it into mini-batches to feed into the network for training:

    # Build mini-batches for model training
    # Different kinds of data go into different tensors so the network can process them;
    # numpy's array function builds these tensors before they are fed into the network
    def build_batch(dataset, batch_size, epoch_num):
        # center_word_batch buffers batch_size center words
        center_word_batch = []
        # target_word_batch buffers batch_size target words (positive or negative samples)
        target_word_batch = []
        # label_batch buffers batch_size 0/1 labels used for training
        label_batch = []

        for epoch in range(epoch_num):
            # Shuffle the data before every epoch to improve training
            random.shuffle(dataset)

            for center_word, target_word, label in dataset:
                # Walk through every sample in dataset and route its fields to the buffers
                center_word_batch.append([center_word])
                target_word_batch.append([target_word])
                label_batch.append(label)

                # Once batch_size samples have accumulated, hand the batch back.
                # numpy's array function wraps the lists as tensors, and the batch is
                # yielded through Python's generator mechanism, which saves memory
                if len(center_word_batch) == batch_size:
                    yield np.array(center_word_batch).astype("int64"), \
                        np.array(target_word_batch).astype("int64"), \
                        np.array(label_batch).astype("float32")
                    center_word_batch = []
                    target_word_batch = []
                    label_batch = []

        # Yield whatever is left over as a final, smaller batch
        if len(center_word_batch) > 0:
            yield np.array(center_word_batch).astype("int64"), \
                np.array(target_word_batch).astype("int64"), \
                np.array(label_batch).astype("float32")

    for _, batch in zip(range(10), build_batch(dataset, 128, 3)):
        print(batch)
        break

Output:

(array([[ 67094],

[ 1246],

[ 269],

[ 40],

[ 62],

[ 5437],

[ 254],

[ 5640],

[ 1],

[ 2720],

[143153],

[ 24],

[ 2792],

[ 51456],

[ 1193],

[ 6718],

[ 42478],

[ 53966],

[ 78],

[ 1473],

[ 17262],

[ 51074],

[ 867],

[ 1443],

[ 282],

[ 1],

[ 2203],

[ 617],

[ 2549],

[ 511],

[ 26890],

[ 6478],

[ 340],

[ 629],

[ 2597],

[ 48],

[ 8822],

[ 994],

[ 774],

[ 4622],

[ 3828],

[ 2161],

[ 954],

[ 26342],

[ 8],

[ 24797],

[ 120],

[ 0],

[ 910],

[ 29156],

[ 1154],

[ 792],

[ 37],

[ 8852],

[ 3832],

[ 754],

[ 18863],

[ 4923],

[ 65164],

[ 4738],

[103207],

[ 26567],

[ 4778],

[ 4829],

[ 37],

[ 2626],

[ 480],

[ 99],

[ 1549],

[ 7298],

[ 5718],

[ 10882],

[ 2149],

[ 1338],

[ 3861],

[ 7],

[ 5883],

[ 2686],

[ 59392],

[ 5],

[ 492],

[ 78],

[ 10658],

[ 462],

[ 10520],

[ 12085],

[ 1026],

[ 972],

[ 7210],

[ 10],

[ 13144],

[ 53],

[ 755],

[ 85],

[ 2822],

[ 97],

[ 17285],

[ 3113],

[ 483],

[ 665],

[ 60408],

[ 1064],

[ 16389],

[ 317],

[ 991],

[ 742],

[ 7987],

[ 1389],

[ 901],

[ 5229],

[ 82742],

[ 1],

[ 4224],

[ 9371],

[ 1136],

[ 3108],

[ 16],

[ 146],

[ 6058],

[ 3586],

[ 2711],

[ 2523],

[ 425],

[ 1639],

[ 1066],

[ 535],

[ 35],

[ 275]]), array([[ 9630],

[ 49061],

[175120],

[ 46346],

[171294],

[ 12062],

[ 2995],

[ 3257],

[ 25517],

[ 31419],

[143154],

[103397],

[ 73462],

[ 23919],

[248332],

[111682],

[151543],

[ 5023],

[ 36705],

[175351],

[ 85586],

[200897],

[ 95870],

[236052],

[112839],

[170774],

[153366],

[144690],

[ 4946],

[131688],

[ 85193],

[ 98611],

[ 87780],

[253121],

[142753],

[106440],

[223610],

[152308],

[ 2767],

[160780],

[ 16771],

[ 98474],

[ 61807],

[245053],

[207477],

[ 6056],

[ 25902],

[ 45111],

[ 402],

[ 5862],

[ 45677],

[143169],

[142246],

[ 89868],

[ 22800],

[ 727],

[239176],

[102293],

[ 67371],

[ 2385],

[ 1217],

[ 33167],

[198018],

[ 32016],

[242525],

[192277],

[ 6367],

[179017],

[ 82478],

[ 55694],

[233023],

[ 20392],

[205578],

[140934],

[ 57024],

[113381],

[ 45499],

[192023],

[ 60521],

[ 367],

[106530],

[170655],

[ 62683],

[ 56127],

[152971],

[ 6085],

[ 6556],

[ 63958],

[115295],

[186517],

[137820],

[114494],

[238139],

[131404],

[122564],

[ 38698],

[160346],

[ 1352],

[ 4092],

[224773],

[222940],

[ 42741],

[178002],

[ 8287],

[ 10076],

[202071],

[223865],

[ 126],

[ 796],

[147081],

[231743],

[ 94084],

[ 53],

[ 23619],

[225183],

[141744],

[130984],

[249454],

[144171],

[239574],

[253434],

[ 22969],

[188237],

[134192],

[ 1762],

[ 69854],

[116832],

[ 38578]]), array([1., 0., 0., 0., 0., 1., 1., 0., 0., 0., 1., 0., 0., 1., 0., 0., 0.,

1., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.,

0., 0., 0., 0., 1., 0., 0., 0., 0., 0., 0., 0., 1., 0., 1., 1., 0.,

0., 0., 0., 0., 1., 0., 0., 0., 1., 1., 0., 0., 0., 0., 0., 1., 0.,

0., 0., 0., 1., 0., 0., 0., 0., 1., 0., 0., 1., 0., 0., 0., 0., 0.,

0., 0., 0., 0., 0., 1., 0., 0., 0., 0., 0., 0., 1., 1., 0., 0., 0.,

0., 0., 1., 0., 0., 1., 1., 0., 0., 0., 1., 0., 0., 0., 0., 0., 0.,

0., 0., 0., 0., 0., 1., 0., 0., 0.], dtype=float32))

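Network definition

Define the Skip-gram network structure used for training. In PaddlePaddle dynamic-graph mode, any network is built by defining a class that inherits from paddle.nn.Layer, which declares the network structure, parameters, and other data. The computation logic of the network is defined in the forward function. Note that only the forward computation has to be defined; PaddlePaddle completes the backward computation automatically.

The key API in the Skip-gram network is paddle.nn.Embedding, which implements an embedding layer. The PaddlePaddle API documentation describes it in more detail:

paddle.nn.Embedding(num_embeddings, embedding_dim, padding_idx=None, sparse=False, weight_attr=None, name=None)

This interface builds a callable Embedding object. It looks up embeddings in the embedding matrix according to the ids in input, and automatically constructs a two-dimensional embedding matrix from the given size (num_embeddings, embedding_dim). The shape of the output Tensor is the shape of the input Tensor with an extra dimension of size emb_size appended after the last dimension. Note: the ids in input must satisfy 0 <= id < size[0]; otherwise the program raises an exception and exits.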
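The shape rule in the description above explains why the network later flattens its output with a reshape. The sketch below imitates the lookup with plain NumPy indexing rather than calling paddle.nn.Embedding itself, so it only illustrates the shape behaviour; the table values and IDs are made up.

```python
import numpy as np

vocab_size, emb_size = 10, 4
# Stand-in embedding matrix of shape [vocab_size, emb_size].
table = np.arange(vocab_size * emb_size, dtype="float32").reshape(vocab_size, emb_size)

# IDs shaped [batch_size, 1], the layout that build_batch produces.
ids = np.array([[3], [0], [7]])

# The lookup appends an emb_size dimension after the last input dimension.
out = table[ids]
print(out.shape)  # (3, 1, 4)
```

With [batch_size, 1] inputs, the element-wise product summed over the last axis has shape [batch_size, 1], which is then reshaped to [batch_size] to match the labels.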

    # Define the Skip-gram training network
    # Uses PaddlePaddle 2.0.0
    # When training with Paddle, the network structure is usually defined by a class
    # that inherits from paddle.nn.Layer
    class SkipGram(nn.Layer):
        def __init__(self, vocab_size, embedding_size, init_scale=0.1):
            # vocab_size: vocabulary size of this Skip-gram model
            # embedding_size: dimension of the word vectors
            # init_scale: range used to initialize the word vectors;
            #             a small range usually helps training
            super(SkipGram, self).__init__()
            self.vocab_size = vocab_size
            self.embedding_size = embedding_size

            # Build a word vector parameter with Embedding
            # shape: [self.vocab_size, self.embedding_size]
            # dtype: float32
            # initialized by uniform sampling in [-init_scale, init_scale]
            self.embedding = Embedding(
                num_embeddings=self.vocab_size,
                embedding_dim=self.embedding_size,
                weight_attr=paddle.ParamAttr(
                    initializer=paddle.nn.initializer.Uniform(
                        low=-init_scale, high=init_scale)))

            # Build a second word vector parameter with Embedding
            # shape: [self.vocab_size, self.embedding_size]
            # initialized by uniform sampling in [-init_scale, init_scale]
            self.embedding_out = Embedding(
                num_embeddings=self.vocab_size,
                embedding_dim=self.embedding_size,
                weight_attr=paddle.ParamAttr(
                    initializer=paddle.nn.initializer.Uniform(
                        low=-init_scale, high=init_scale)))

        # Forward computation of the network
        # center_words: tensor (mini-batch) of center words
        # target_words: tensor (mini-batch) of target words
        # label: tensor (mini-batch) marking each sample as positive (1) or negative (0)
        def forward(self, center_words, target_words, label):
            # First convert the words in the mini-batch into word vectors.
            # center_words are looked up in self.embedding,
            # while target_words are looked up in a different parameter, self.embedding_out
            center_words_emb = self.embedding(center_words)
            target_words_emb = self.embedding_out(target_words)

            # The dot product of center and target vectors gives the output score,
            # and sigmoid turns it into the probability that the sample is positive
            word_sim = paddle.multiply(center_words_emb, target_words_emb)
            word_sim = paddle.sum(word_sim, axis=-1)
            word_sim = paddle.reshape(word_sim, shape=[-1])
            pred = F.sigmoid(word_sim)

            # Define the loss from the estimated probability. Note that
            # binary_cross_entropy_with_logits fuses sigmoid and cross entropy into one
            # better-optimized step, so its input is word_sim rather than pred
            loss = F.binary_cross_entropy_with_logits(word_sim, label)
            loss = paddle.mean(loss)

            # Return the forward results; Paddle computes the gradients
            # automatically when backward is called
            return pred, loss

    # Start training: define the hyperparameters used during training
    batch_size = 512
    epoch_num = 3
    embedding_size = 200
    step = 0
    learning_rate = 0.001

    # Find similar words using the learned word embeddings
    # query_token: the word to query; k: how many of the most similar words to return;
    # embed: the learned word embedding parameter
    # Similarity between words is measured by cosine similarity:
    # x is the embedding of the query word and W holds the embeddings of all words;
    # the cosine of x with every row of W gives a score vector, and the indices of
    # the top k scores are kept in indices
    def get_similar_tokens(query_token, k, embed):
        W = embed.numpy()
        x = W[word2id_dict[query_token]]
        cos = np.dot(W, x) / np.sqrt(np.sum(W * W, axis=1) * np.sum(x * x) + 1e-9)
        flat = cos.flatten()
        indices = np.argpartition(flat, -k)[-k:]
        indices = indices[np.argsort(-flat[indices])]
        for i in indices:
            print('for word %s, the similar word is %s' % (query_token, str(id2word_dict[i])))

    # Build a Skip-gram network from the SkipGram class defined above
    skip_gram_model = SkipGram(vocab_size, embedding_size)

    # Build the optimizer that trains this network
    adam = paddle.optimizer.Adam(learning_rate=learning_rate,
                                 parameters=skip_gram_model.parameters())

    # Use build_batch to iterate over the training data one mini-batch at a time
    for center_words, target_words, label in build_batch(dataset, batch_size, epoch_num):
        # Use paddle.to_tensor to convert the numpy arrays into Paddle tensors
        center_words_var = paddle.to_tensor(center_words)
        target_words_var = paddle.to_tensor(target_words)
        label_var = paddle.to_tensor(label)

        # Feed the tensors to the model for one forward pass
        pred, loss = skip_gram_model(center_words_var, target_words_var, label_var)

        # Backward pass (computed automatically)
        loss.backward()
        # Take one optimization step on the parameters
        adam.step()
        # Clear the gradients so the next mini-batch starts fresh
        adam.clear_grad()

        # Every 1000 mini-batches, print the current loss to check that it is decreasing
        step += 1
        if step % 1000 == 0:
            print("step %d, loss %.3f" % (step, loss.numpy()[0]))

        # Every 10000 steps, print the 5 words most similar to each query word,
        # using cosine similarity between word vectors
        if step % 10000 == 0:
            get_similar_tokens('movie', 5, skip_gram_model.embedding.weight)
            get_similar_tokens('one', 5, skip_gram_model.embedding.weight)
            get_similar_tokens('chip', 5, skip_gram_model.embedding.weight)

Output:

W0510 10:08:14.728967 99 gpu_context.cc:244] Please NOTE: device: 0, GPU Compute Capability: 7.0,

Driver API Version: 11.0, Runtime API Version: 10.1 W0510 10:08:14.733274 99 gpu_context.cc:272] device: 0, cuDNN Version: 7.6.

step 1000, loss 0.692 step 2000, loss 0.684 step 3000, loss 0.620 step 4000, loss 0.511 step 5000, loss 0.391 step 6000, loss 0.292 step 7000, loss 0.275 step 8000, loss 0.267 step 9000, loss 0.241 step 10000, loss 0.244 for word movie, the similar word is movie for word movie, the similar word is amigaos for word movie, the similar word is northwestern for word movie, the similar word is european for word movie, the similar word is fuller for word one, the similar word is one for word one, the similar word is four for word one, the similar word is three for word one, the similar word is november for word one, the similar word is nine for word chip, the similar word is chip for word chip, the similar word is instances for word chip, the similar word is publicly for word chip, the similar word is integers for word chip, the similar word is lasting step 11000, loss 0.211 step 12000, loss 0.212 step 13000, loss 0.215 step 14000, loss 0.225 step 15000, loss 0.181 step 16000, loss 0.234 step 17000, loss 0.199 step 18000, loss 0.251 step 19000, loss 0.181 step 20000, loss 0.137 for word movie, the similar word is movie for word movie, the similar word is rosenberg for word movie, the similar word is booed for word movie, the similar word is pacifist for word movie, the similar word is algarve for word one, the similar word is one for word one, the similar word is eight for word one, the similar word is three for word one, the similar word is five for word one, the similar word is seven for word chip, the similar word is chip for word chip, the similar word is tumors for word chip, the similar word is instability for word chip, the similar word is workshops for word chip, the similar word is vfl step 21000, loss 0.192 step 22000, loss 0.168 step 23000, loss 0.254 step 24000, loss 0.213 step 25000, loss 0.209 step 26000, loss 0.187 step 27000, loss 0.255 step 28000, loss 0.187 step 29000, loss 0.229 step 30000, loss 0.276 for word movie, the similar word is movie for word 
movie, the similar word is canals for word movie, the similar word is animating for word movie, the similar word is stabs for word movie, the similar word is heywood for word one, the similar word is one for word one, the similar word is six for word one, the similar word is three for word one, the similar word is nine for word one, the similar word is eight for word chip, the similar word is chip for word chip, the similar word is brocklin for word chip, the similar word is eof for word chip, the similar word is oistrakh for word chip, the similar word is purists step 31000, loss 0.196 step 32000, loss 0.225 step 33000, loss 0.235 step 34000, loss 0.226 step 35000, loss 0.219 step 36000, loss 0.188 step 37000, loss 0.194 step 38000, loss 0.187 step 39000, loss 0.135 step 40000, loss 0.207 for word movie, the similar word is movie for word movie, the similar word is looked for word movie, the similar word is lisa for word movie, the similar word is one for word movie, the similar word is sebastien for word one, the similar word is one for word one, the similar word is nine for word one, the similar word is six for word one, the similar word is eight for word one, the similar word is seven for word chip, the similar word is chip for word chip, the similar word is faria for word chip, the similar word is nefeld for word chip, the similar word is brocklin for word chip, the similar word is invigoration step 41000, loss 0.222 step 42000, loss 0.255 step 43000, loss 0.223 step 44000, loss 0.162 step 45000, loss 0.173 step 46000, loss 0.186 step 47000, loss 0.171 step 48000, loss 0.179 step 49000, loss 0.232 step 50000, loss 0.191 for word movie, the similar word is movie for word movie, the similar word is fielding for word movie, the similar word is drowned for word movie, the similar word is lyrics for word movie, the similar word is couch for word one, the similar word is one for word one, the similar word is nine for word one, the similar word is six for word one, 
the similar word is eight for word one, the similar word is zero for word chip, the similar word is chip for word chip, the similar word is faria for word chip, the similar word is invigoration for word chip, the similar word is segeju for word chip, the similar word is surifarm step 51000, loss 0.163 step 52000, loss 0.201 step 53000, loss 0.179 step 54000, loss 0.143 step 55000, loss 0.240 step 56000, loss 0.154 step 57000, loss 0.212 step 58000, loss 0.149 step 59000, loss 0.242 step 60000, loss 0.147 for word movie, the similar word is movie for word movie, the similar word is fiction for word movie, the similar word is album for word movie, the similar word is blaxploitation for word movie, the similar word is best for word one, the similar word is one for word one, the similar word is eight for word one, the similar word is nine for word one, the similar word is three for word one, the similar word is six for word chip, the similar word is chip for word chip, the similar word is processor for word chip, the similar word is faria for word chip, the similar word is segeju for word chip, the similar word is bytes step 61000, loss 0.236 step 62000, loss 0.147 step 63000, loss 0.197 step 64000, loss 0.151 step 65000, loss 0.140 step 66000, loss 0.183 step 67000, loss 0.184 step 68000, loss 0.236 step 69000, loss 0.123 step 70000, loss 0.132 for word movie, the similar word is movie for word movie, the similar word is album for word movie, the similar word is animated for word movie, the similar word is hollywood for word movie, the similar word is madonna for word one, the similar word is one for word one, the similar word is nine for word one, the similar word is six for word one, the similar word is five for word one, the similar word is zero for word chip, the similar word is chip for word chip, the similar word is processor for word chip, the similar word is controller for word chip, the similar word is cpu for word chip, the similar word is api step 71000, 
loss 0.148 step 72000, loss 0.165 step 73000, loss 0.154 step 74000, loss 0.125 step 75000, loss 0.156 step 76000, loss 0.156 step 77000, loss 0.130 step 78000, loss 0.131 step 79000, loss 0.194 step 80000, loss 0.146 for word movie, the similar word is movie for word movie, the similar word is starring for word movie, the similar word is glenn for word movie, the similar word is movies for word movie, the similar word is novels for word one, the similar word is one for word one, the similar word is six for word one, the similar word is birthplace for word one, the similar word is prutenische for word one, the similar word is conductor for word chip, the similar word is chip for word chip, the similar word is processor for word chip, the similar word is controller for word chip, the similar word is cpu for word chip, the similar word is kb step 81000, loss 0.167 step 82000, loss 0.174 step 83000, loss 0.147 step 84000, loss 0.133 step 85000, loss 0.145 step 86000, loss 0.200 step 87000, loss 0.196 step 88000, loss 0.110 step 89000, loss 0.111 step 90000, loss 0.158 for word movie, the similar word is movie for word movie, the similar word is hank for word movie, the similar word is glenn for word movie, the similar word is lynch for word movie, the similar word is terry for word one, the similar word is one for word one, the similar word is collins for word one, the similar word is birthplace for word one, the similar word is cartoonist for word one, the similar word is richardson for word chip, the similar word is chip for word chip, the similar word is controller for word chip, the similar word is processor for word chip, the similar word is api for word chip, the similar word is macs step 91000, loss 0.133 step 92000, loss 0.118 step 93000, loss 0.094 step 94000, loss 0.155 step 95000, loss 0.145 step 96000, loss 0.116 step 97000, loss 0.138 step 98000, loss 0.268 step 99000, loss 0.145 step 100000, loss 0.208 for word movie, the similar word is movie for word 
movie, the similar word is watching for word movie, the similar word is blaxploitation for word movie, the similar word is hank for word movie, the similar word is debut for word one, the similar word is one for word one, the similar word is southerly for word one, the similar word is cartoonist for word one, the similar word is conductor for word one, the similar word is annie for word chip, the similar word is chip for word chip, the similar word is processor for word chip, the similar word is controller for word chip, the similar word is api for word chip, the similar word is macs step 101000, loss 0.170 step 102000, loss 0.196 step 103000, loss 0.172 step 104000, loss 0.138 step 105000, loss 0.205 step 106000, loss 0.149 step 107000, loss 0.123 step 108000, loss 0.188 step 109000, loss 0.197 step 110000, loss 0.180 for word movie, the similar word is movie for word movie, the similar word is hank for word movie, the similar word is starring for word movie, the similar word is albums for word movie, the similar word is watching for word one, the similar word is one for word one, the similar word is cartoonist for word one, the similar word is conductor for word one, the similar word is annie for word one, the similar word is lili for word chip, the similar word is chip for word chip, the similar word is controller for word chip, the similar word is macs for word chip, the similar word is processor for word chip, the similar word is converter step 111000, loss 0.153 step 112000, loss 0.172 step 113000, loss 0.155 step 114000, loss 0.145 step 115000, loss 0.164 step 116000, loss 0.143 step 117000, loss 0.127 step 118000, loss 0.154 step 119000, loss 0.174 step 120000, loss 0.194 for word movie, the similar word is movie for word movie, the similar word is aerialbot for word movie, the similar word is disney for word movie, the similar word is toured for word movie, the similar word is cube for word one, the similar word is one for word one, the similar word is 
conductor for word one, the similar word is marius for word one, the similar word is cartoonist for word one, the similar word is annie for word chip, the similar word is chip for word chip, the similar word is controller for word chip, the similar word is customized for word chip, the similar word is macs for word chip, the similar word is converter step 121000, loss 0.144 step 122000, loss 0.121 step 123000, loss 0.209 step 124000, loss 0.184 step 125000, loss 0.120 step 126000, loss 0.127 step 127000, loss 0.179 step 128000, loss 0.106 step 129000, loss 0.138 step 130000, loss 0.084 for word movie, the similar word is movie for word movie, the similar word is disney for word movie, the similar word is cube for word movie, the similar word is blaxploitation for word movie, the similar word is watching for word one, the similar word is one for word one, the similar word is southerly for word one, the similar word is publishes for word one, the similar word is cartoonist for word one, the similar word is zino for word chip, the similar word is chip for word chip, the similar word is controller for word chip, the similar word is api for word chip, the similar word is processor for word chip, the similar word is macs step 131000, loss 0.165 step 132000, loss 0.211 step 133000, loss 0.134 step 134000, loss 0.133 step 135000, loss 0.148 step 136000, loss 0.162 step 137000, loss 0.067 step 138000, loss 0.082 step 139000, loss 0.066 step 140000, loss 0.103 for word movie, the similar word is movie for word movie, the similar word is disney for word movie, the similar word is watching for word movie, the similar word is vince for word movie, the similar word is lynch for word one, the similar word is one for word one, the similar word is publishes for word one, the similar word is southerly for word one, the similar word is apointed for word one, the similar word is melbourne for word chip, the similar word is chip for word chip, the similar word is controller for word 
chip, the similar word is cpu for word chip, the similar word is macs for word chip, the similar word is kb step 141000, loss 0.075 step 142000, loss 0.080 step 143000, loss 0.098 step 144000, loss 0.064 step 145000, loss 0.144 step 146000, loss 0.072 step 147000, loss 0.085 step 148000, loss 0.093 step 149000, loss 0.089 step 150000, loss 0.052 for word movie, the similar word is movie for word movie, the similar word is watching for word movie, the similar word is disney for word movie, the similar word is vince for word movie, the similar word is hank for word one, the similar word is one for word one, the similar word is athlete for word one, the similar word is trionfo for word one, the similar word is cartoonist for word one, the similar word is apointed for word chip, the similar word is chip for word chip, the similar word is controller for word chip, the similar word is cpu for word chip, the similar word is kb for word chip, the similar word is macs step 151000, loss 0.064 step 152000, loss 0.071 step 153000, loss 0.147 step 154000, loss 0.084 step 155000, loss 0.098 step 156000, loss 0.136 step 157000, loss 0.059 step 158000, loss 0.076 step 159000, loss 0.076 step 160000, loss 0.087 for word movie, the similar word is movie for word movie, the similar word is vince for word movie, the similar word is directed for word movie, the similar word is cube for word movie, the similar word is aerialbot for word one, the similar word is one for word one, the similar word is athlete for word one, the similar word is apointed for word one, the similar word is leopold for word one, the similar word is trionfo for word chip, the similar word is chip for word chip, the similar word is controller for word chip, the similar word is cpu for word chip, the similar word is api for word chip, the similar word is windows step 161000, loss 0.046 step 162000, loss 0.067 step 163000, loss 0.068 step 164000, loss 0.104 step 165000, loss 0.107 step 166000, loss 0.058 step 
167000, loss 0.080 step 168000, loss 0.114 step 169000, loss 0.100 step 170000, loss 0.079 for word movie, the similar word is movie for word movie, the similar word is vince for word movie, the similar word is bassist for word movie, the similar word is hank for word movie, the similar word is aerialbot for word one, the similar word is one for word one, the similar word is athlete for word one, the similar word is trionfo for word one, the similar word is cartoonist for word one, the similar word is nc for word chip, the similar word is chip for word chip, the similar word is controller for word chip, the similar word is cpu for word chip, the similar word is api for word chip, the similar word is paint step 171000, loss 0.060 step 172000, loss 0.092 step 173000, loss 0.114 step 174000, loss 0.079 step 175000, loss 0.094 step 176000, loss 0.093 step 177000, loss 0.127 step 178000, loss 0.053 step 179000, loss 0.087 step 180000, loss 0.144 for word movie, the similar word is movie for word movie, the similar word is watching for word movie, the similar word is aerialbot for word movie, the similar word is hank for word movie, the similar word is vince for word one, the similar word is one for word one, the similar word is athlete for word one, the similar word is trionfo for word one, the similar word is apointed for word one, the similar word is handbook for word chip, the similar word is chip for word chip, the similar word is controller for word chip, the similar word is cpu for word chip, the similar word is paint for word chip, the similar word is risc step 181000, loss 0.090 step 182000, loss 0.122 step 183000, loss 0.104 step 184000, loss 0.089 step 185000, loss 0.073 step 186000, loss 0.130 step 187000, loss 0.141 step 188000, loss 0.077 step 189000, loss 0.091 step 190000, loss 0.105 for word movie, the similar word is movie for word movie, the similar word is watching for word movie, the similar word is reasearchers for word movie, the similar word is 
aerialbot for word movie, the similar word is cube for word one, the similar word is one for word one, the similar word is trionfo for word one, the similar word is athlete for word one, the similar word is apointed for word one, the similar word is schwartz for word chip, the similar word is chip for word chip, the similar word is controller for word chip, the similar word is paint for word chip, the similar word is cpu for word chip, the similar word is risc step 191000, loss 0.058 step 192000, loss 0.144 step 193000, loss 0.073 step 194000, loss 0.068 step 195000, loss 0.125 step 196000, loss 0.073 step 197000, loss 0.086 step 198000, loss 0.148 step 199000, loss 0.163 step 200000, loss 0.091 for word movie, the similar word is movie for word movie, the similar word is watching for word movie, the similar word is aerialbot for word movie, the similar word is reasearchers for word movie, the similar word is rephlexions for word one, the similar word is one for word one, the similar word is athlete for word one, the similar word is vaalserberg for word one, the similar word is trionfo for word one, the similar word is passo for word chip, the similar word is chip for word chip, the similar word is controller for word chip, the similar word is paint for word chip, the similar word is api for word chip, the similar word is risc step 201000, loss 0.196 step 202000, loss 0.123 step 203000, loss 0.126 step 204000, loss 0.094

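The program takes about an hour to run, so please be patient. The printed results show that, after enough training steps, the loss gradually decreases (with some fluctuation) and tends to level off. They also show that the skip-gram model picks up some interesting linguistic patterns. For example, the words closest to "who" are "who, he, she, him, himself"; likewise, in the log above, the nearest neighbors of "chip" come to include "processor", "controller", and "cpu".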

Interesting applications of word vectors

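While working with word2vec models, researchers noticed some interesting phenomena. Once word embeddings have been obtained for the whole vocabulary, the words closest to any given word can be found by taking the dot product of its vector with every other vector (on length-normalized vectors this is the cosine similarity) and ranking the results. It turns out that word2vec automatically learns which words are semantically related, whether near-synonyms or words of the same category, for example: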

Top 5 words closest to "beijing" are:
1. newyork
2. paris
3. tokyo
4. berlin
5. seoul

...

Top 5 words closest to "apple" are:
1. banana
2. pineapple
3. huawei
4. peach
5. orange
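The nearest-word lookup described here can be sketched in a few lines of plain Python. This is only a minimal illustration under stated assumptions: the three-dimensional vectors in `toy_embeddings` are hand-written and hypothetical (they were not produced by the training run), and the helper names `cosine_similarity` and `top_k_similar` are made up for this example. Unlike the training log, which lists the query word itself as its own closest match, this sketch excludes the query word from the results.

```python
import math

# Hypothetical toy embeddings, hand-written for illustration only.
# Real word2vec vectors are learned and usually have 100 or more dimensions.
toy_embeddings = {
    "beijing": [0.90, 0.10, 0.00],
    "paris":   [0.80, 0.20, 0.10],
    "tokyo":   [0.85, 0.15, 0.05],
    "apple":   [0.10, 0.90, 0.20],
    "banana":  [0.05, 0.95, 0.10],
    "peach":   [0.15, 0.85, 0.15],
}


def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k_similar(query, embeddings, k=2):
    """Return the k words whose vectors are most similar to the query word."""
    query_vec = embeddings[query]
    scores = [
        (word, cosine_similarity(query_vec, vec))
        for word, vec in embeddings.items()
        if word != query  # skip the query word itself
    ]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return [word for word, _ in scores[:k]]


beijing_neighbors = top_k_similar("beijing", toy_embeddings, k=2)
apple_neighbors = top_k_similar("apple", toy_embeddings, k=2)
print(beijing_neighbors)
print(apple_neighbors)
```

With a trained model, the same ranking would be run over the full embedding matrix, usually as a single matrix-vector product rather than a Python loop.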

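Beyond this, researchers also found that adding and subtracting word vectors can carry out some language-based analogical reasoning, for example: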

Top 1 word closest to "king - man + woman" is:
1. queen

...

Top 1 word closest to "capital - china + america" is:
1. Washington
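The vector arithmetic behind such analogies can be sketched as follows. This is a hedged illustration, not the tutorial's implementation: the vectors in `toy_vectors` are hypothetical and were constructed by hand so that the analogy holds exactly, and the function name `analogy` is invented for this example. With embeddings from a real training run the result depends on the corpus and on training quality, so "queen" is not guaranteed to come out on top.

```python
import math

# Hypothetical hand-built vectors: the first component loosely stands for
# "royalty", the second for "male", the third for "fruit". Not learned values.
toy_vectors = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.1],
    "man":   [0.1, 0.8, 0.1],
    "woman": [0.1, 0.1, 0.1],
    "apple": [0.1, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(a, b, c, vectors):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding a, b, c."""
    target = [
        x - y + z
        for x, y, z in zip(vectors[a], vectors[b], vectors[c])
    ]
    candidates = [word for word in vectors if word not in (a, b, c)]
    return max(candidates, key=lambda w: cosine_similarity(target, vectors[w]))


result = analogy("king", "man", "woman", toy_vectors)
print(result)
```

Excluding the three input words from the candidates is a common design choice, because the target vector often stays closest to one of its own inputs.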

There are many more interesting examples like these. Try implementing them yourself with PaddlePaddle (飞桨).