文档简介:

概览

持续构建与发布是企业研发工作中必不可少的一个步骤,目前大多公司都采用 Jenkins 集群来搭建符合需求的 CI/CD 流程,Jenkins的持续发布流程可以跟Kubernetes集群更好的对接,更好的发挥它的部署优势,这篇文档可以指定用户把Jenkins发布流程跟CCE集群集成。

操作步骤

1. 设置 Jenkins 存储目录

在 Kubenetes 环境下所起的应用都是一个个Docker镜像,为了保证应用重启的情况下数据安全,所以需要将Jenkins的数据目录持久化到存储中。这里用的是CCE提供的多种持久化存储之一,方便在Kubernetes环境下应用启动节点转义数据一致。当然也可以选择存储到本地,但是为了保证应用数据一致,需要设置Jenkins固定到某一 Kubernetes节点。

参考 容器引擎CCE-操作指南-存储管理 一节。

选取任意一种方式部署生成PVC,记录下PVC的名字。

2. 部署 Jenkins Server 到 Kubernetes

service-account.yaml

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: jenkins

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: jenkins

rules:

- apiGroups: [""]

resources: ["pods"]

verbs: ["create","delete","get","list","patch","update","watch"]

- apiGroups: [""]

resources: ["pods/exec"]

verbs: ["create","delete","get","list","patch","update","watch"]

- apiGroups: [""]

resources: ["pods/log"]

verbs: ["get","list","watch"]

- apiGroups: [""]

resources: ["events"]

verbs: ["watch"]

- apiGroups: [""]

resources: ["secrets"]

verbs: ["get"]

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: RoleBinding

metadata:

name: jenkins

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: jenkins

subjects:

- kind: ServiceAccount

name: jenkins

jenkins.yaml

# jenkins

---

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: jenkins

labels:

name: jenkins

spec:

selector:

matchLabels:

name: jenkins

serviceName: jenkins

replicas: 1

updateStrategy:

type: RollingUpdate

template:

metadata:

name: jenkins

labels:

name: jenkins

spec:

terminationGracePeriodSeconds: 10

serviceAccountName: jenkins

containers:

- name: jenkins

image: hub.baidubce.com/jpaas-public/jenkins-github:v0

imagePullPolicy: Always

ports:

- containerPort: 8080

- containerPort: 50000

resources:

limits:

cpu: 1

memory: 1Gi

requests:

cpu: 0.5

memory: 500Mi

env:

- name: LIMITS_MEMORY

valueFrom:

resourceFieldRef:

resource: limits.memory

divisor: 1Mi

- name: JAVA_OPTS

# value: -XX:+UnlockExperimentalVMOptions -XX:+UseCGroupMemoryLimitForHeap -XX:MaxRAMFraction=1

-XshowSettings:vm -Dhudson.slaves.NodeProvisioner.initialDelay=0 -Dhudson.slaves.NodeProvisioner.MARGIN=50

-Dhudson.slaves.NodeProvisioner.MARGIN0=0.85

value: -Xmx$(LIMITS_MEMORY)m -XshowSettings:vm -Dhudson.slaves.NodeProvisioner.initialDelay=0

-Dhudson.slaves.NodeProvisioner.MARGIN=50 -Dhudson.slaves.NodeProvisioner.MARGIN0=0.85

volumeMounts:

- name: jenkins-home

mountPath: /var/jenkins_home

livenessProbe:

httpGet:

path: /login

port: 8080

initialDelaySeconds: 60

timeoutSeconds: 5

failureThreshold: 12 # ~2 minutes

readinessProbe:

httpGet:

path: /login

port: 8080

initialDelaySeconds: 60

timeoutSeconds: 5

failureThreshold: 12 # ~2 minutes

securityContext:

fsGroup: 1000

volumes:

- name: jenkins-home

persistentVolumeClaim:

claimName: myjenkinspvc

---

apiVersion: v1

kind: Service

metadata:

name: jenkins

spec:

type: NodePort

selector:

name: jenkins

# ensure the client ip is propagated to avoid the invalid crumb issue when using LoadBalancer (k8s >=1.7)

#externalTrafficPolicy: Local

ports:

- name: http

port: 80

targetPort: 8080

protocol: TCP

- name: agent

port: 50000

protocol: TCP

注意:

- jenkins.yaml文件中的字段claimName需要改成1、设置Jenkins存储目录中生成的PVC名字

在CCE Kubernetes集群中执行以下命令

kubectl create -f service-account.yaml

kubectl create -f jenkins.yaml

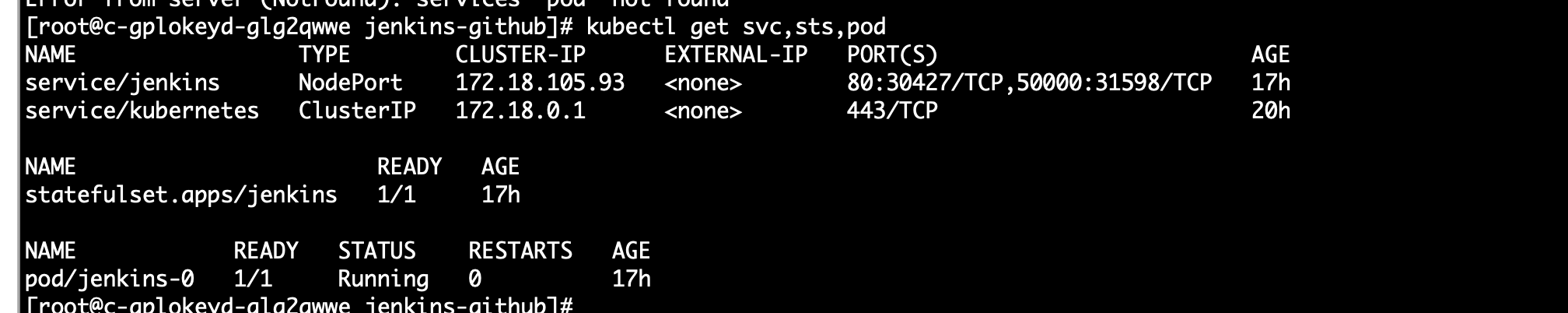

依次生成以下内容代表创建成功

3. 初始化配置 Jenkins

此时Jenkins Master服务已经部署启动起来了,并且将端口暴露到80:30427,50000:31598,此时可以通过浏览器打开http://<Node_IP>:30427访问 Jenkins页面了。

在浏览器上完成Jenkins的初始化插件安装过程,并配置管理员账户信息,这里忽略过程,初始化完成后界面如下:

注意:

- 初始化过程中,让输入 /var/jenkins_home/secret/initialAdminPassword初始密码时,可以直接到挂载的PVC持久化目录里面读取,或者直接进入到容器的内部进行获取

kubectl exec -it jenkins-0 cat /var/jenkins_home/secrets/initialAdminPassword

4. Jenkins 安装 Kubernetes Plugin 插件

管理员账户登录Jenkins Master页面,点击 "系统管理" -> "插件管理" —> "可选插件" —> "Kubernetes" 勾选安装即可。

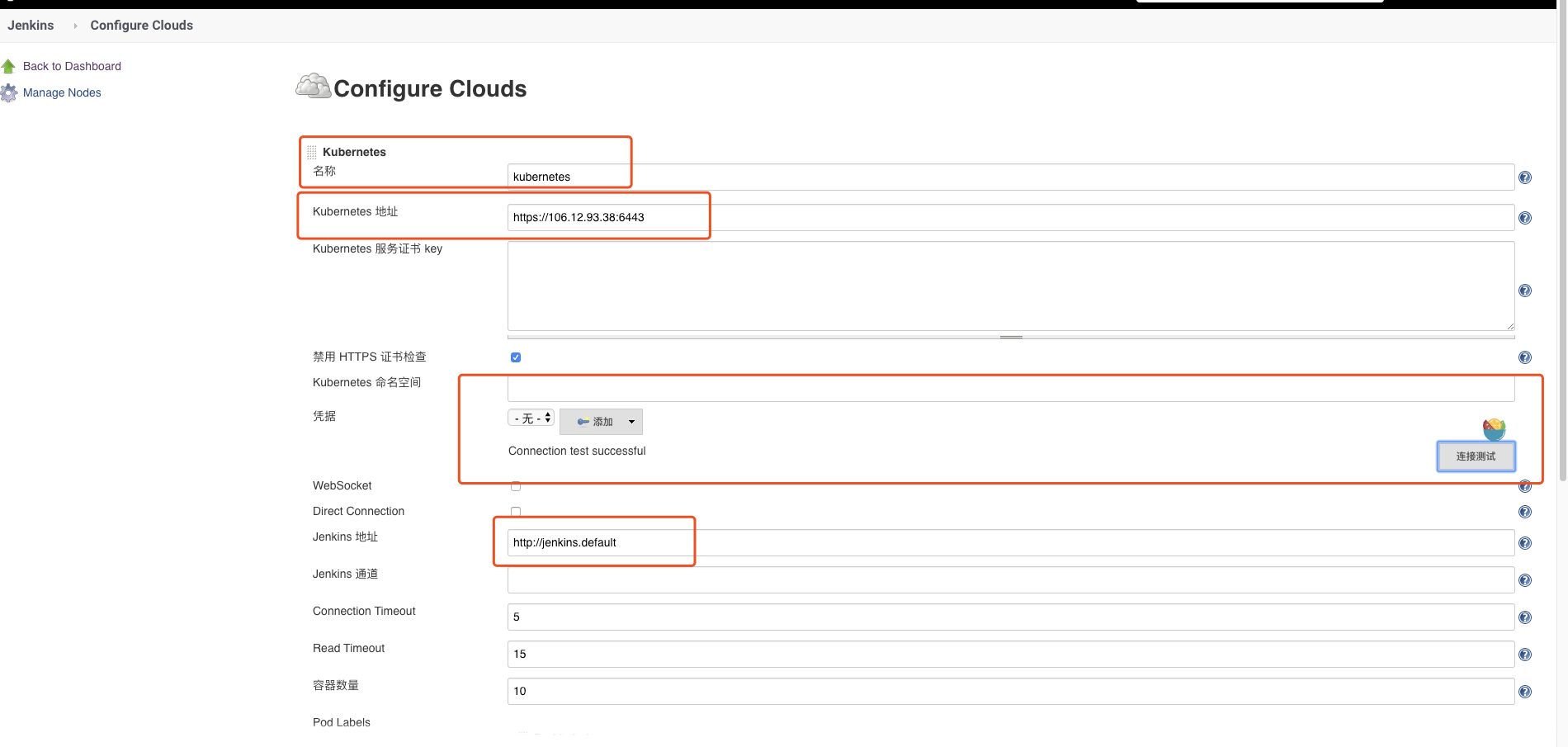

安装完毕后,点击"系统管理" —> "系统设置" —> "新增一个云" —> 选择 "Kubernetes",然后填写Kubernetes和Jenkins配置信息。

说明:

- 1、Name处默认为kubernetes,也可以修改为其他名称,如果这里修改了,下边在执行Job时指定podTemplate()参数cloud为其对应名称,否则会找不到,cloud默认值取:kubernetes

- 2、Kubernetes URL处填写了https://kubernetes.default这里填写了 Kubernetes Service对应的DNS记录,通过该DNS记录可以解析成该Service的Cluster IP。

注意: 也可以填写https://kubernetes.default.svc.cluster.local完整DNS记录,因为它要符合<svc_name>.<namespace_name>.svc.cluster.local的命名方式,或者直接填写外部Kubernetes的地址https://<ClusterIP>:<Ports>。

- 3、Jenkins URL处填写了http://jenkins.default,跟上边类似,也是使用 Jenkins Service对应的DNS记录, 同时也可以用http://<ClusterIP>:<Node_Port>方式,例如我这里可以填http://x.x.x.x:30427也是没有问题的,这里的30427就是对外暴露的NodePort。

- 4、配置完毕,可以点击"Test Connection"按钮测试是否能够连接的到 Kubernetes,如果显示Connection test successful则表示连接成功,配置没有问题

5. 非集群内的 Jenkins 连接 Kubernetes 集群

填写 Kubernetes 配置内容

以一个kubeconfig文件为例

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURwRENDQW95Z0F3SUJBZ0lVUl

dSdmNwRkxNaTVaUFZJUVllL2o2WkxsZlJJd0RRWUpLb1pJaHZjTkFRRUwKQlFBd2FqRUxNQWtHQTFVRUJoTUNRMDR4RURBT0J

nTlZCQWdUQjBKbGFVcHBibWN4RURBT0JnTlZCQWNUQjBKbAphVXBwYm1jeEREQUtCZ05WQkFvVEEyczRjekVVTUJJR0ExVUV

DeE1MWTJ4dmRXUnVZWFJwZG1VeEV6QVJCZ05WCkJBTVRDbXQxWW1WeWJtVjBaWE13SGhjTk1qQXdOVEU0TURjeE5qQXdXaGNO

TWpVd05URTNNRGN4TmpBd1dqQnEKTVFzd0NRWURWUVFHRXdKRFRqRVFNQTRHQTFVRUNCTUhRbVZwU21sdVp6RVFNQTRHQTFVR

UJ4TUhRbVZwU21sdQpaekVNTUFvR0ExVUVDaE1EYXpoek1SUXdFZ1lEVlFRTEV3dGpiRzkxWkc1aGRHbDJaVEVUTUJFR0ExVU

VBeE1LCmEzVmlaWEp1WlhSbGN6Q0NBU0l3RFFZSktvWklodmNOQVFFQkJRQURnZ0VQQURDQ0FRb0NnZ0VCQUtuNmNNMWYKQz

lGMTFVVG1jVFlEQmZJMGlBUnJ2N3RtUEhDNU02NHBQNlViRVhIQ2lMNEFIUEVHK29tdVZKWHZ6MExhNVZRagp2SjZUblc0K

2h4UjNuT2pGSmhGbldVZDcrWUtBM1Fic05qUVNybFNLTVhqRVlTSTA2M3NGS1YzNFZNUERXR3ByClVvVlZWZjduVmVkY0Fh

RTdzeFg3cXFOeERDS3Fjc2cxWCtDNFFrK01zaExKaUdyRnNnRC8rOHNUVkRzVzRoTEMKZ01zZ1R5WlVwRDlmM2hBTXQ0dz

duV3RiWURsOFlvZnhEcU9tYndpVEx3VlNyaXAvZHlVeW9BZXhxbFFWaUgrMwpTNXY5cXRnTXVRRjhUMVNPUkwzcldsMUNYR

0JPU3k0YTVBenA0dUx6TTlnbjJzdzZIU3gySG1aZlpxUlI1SXdICkFnQU9ydStFZ1lXVmNoOENBd0VBQWFOQ01FQXdEZ1l

EVlIwUEFRSC9CQVFEQWdFR01BOEdBMVVkRXdFQi93UUYKTUFNQkFmOHdIUVlEVlIwT0JCWUVGSjBNOXlyc2F5SXEzZnVuYk

hWWHJiZUZZL3RBTUEwR0NTcUdTSWIzRFFFQgpDd1VBQTRJQkFRQkE0UzBoVkpyOTBmNjNPU0tRMzVrUGNvTE9ObTg0TVNUbk

I1OHE3alJHOHBtRHVvc09TUXUwCjZpUE9CZVBMOE92eEpObzhRcHBXOWJCR1N1ZFRKMG5CUnMyTmFjWU1BM0FqeUM0Y09nOF

FJNGxHcUJWQllvVEcKa0I0cVlsYjl4dVJ2bnBJenVUWkd0RkVYQkpkZXFGZzZzSno2THRIZzFiUmM2cGJGZThuVUZZYnRSMz

RPeGl2Qgp5WUNUKzNSbEpxTmhXcjlJK2djNDhMeXNheTBnL3BmQ1lsSzdXQm8rSWFZTXF1Z2Zmc2RQS2dISkU0Tm0xei9pCm

lsNnhYMWV3R1RYMEFsMENKN2tNeitHa3hZalFHcHY1UG5iNzRpOHZZMm5uODVhUnliZVZjeENMZkhTazBTN2kKL0hjTXRkRj

dxMVE5bndqMlhiclMxRmRCTEVSWGFGQTkKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

server: https://1.1.1.1:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: kubernetes-admin

name: kubernetes-admin@kubernetes

current-context: kubernetes-admin@kubernetes

kind: Config

preferences: {}

users:

- name: kubernetes-admin

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUsdafZJQ0FURS0tLS0tCUJBZ0lVQlRMK3lWNXdNbjNvamdk

dzRKSmFBa0RpNTZrd0RRWUpLb1pJaHZjTkFRRUwKQlFBd2FqRUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQ

jBKbGFVcHBibWN4RURBT0JnTlZCQWNUQjBKbAphVXBwYm1jeEREQUtCZ05WQkFvVEEyczRjekVVTUJJR0ExVUVDe

E1MWTJ4dmRXUnVZWFJwZG1VeEV6QVJCZ05WCkJBTVRDbXQxWW1WeWJtVjBaWE13SGhjTk1qQXdOVEU0TURj

eE5qQXdXaGNOTXpBd05URTJNRGN4TmpBd1dqQjcKTVFzd0NRWURWUVFHRXdKRFRqRVFNQTRHQTFVRUNCTUhRbVZwU21

sdVp6RVFNQTRHQTFVRUJ4TUhRbVZwU21sdQpaekVYTUJVR0ExVUVDaE1PYzNsemRHVnRPbTFoYzNSbGNuTXhGREFTQmdOVk

JBc1RDMk5zYjNWa2JtRjBhWFpsCk1Sa3dGd1lEVlFRREV4QnJkV0psY201bGRHVnpMV0ZrYldsdU1JSUJJakFOQmdr

cWhraUc5dzBCQVFFRkFBT0MKQVE4QU1JSUJDZ0tDQVFFQTErQjh2aXJGU0ZTQVJEQk4yOWxzRHN2WDlxaHJRTU82Wnl

CK0E5bWNybUtQZG5NOQozdU80T3FGQ1RjNktxTVNDQStDUHVKdlQwZXFJM29UUWZRMHZmWW40UndVNFhIZ21IbWQ2

Nk50SXlQZUdzZGtGClRJc1FxaElzVm1VQS9ZNXBEb2h1Yk9OQWlGbDFOaEliUTNqU3JmMy9MYzVLY3RYM2ZOSGhx

OE1hblpYYkVmRUsKY1NsdVI5Um4rQkdET1pubGNMZTkzRWRxME9EeFRsaXV6aFNYektUQUhyZnRLbGlIS3hyVXVk

RlFZcGpHaFgrTgpLT2pRTFAzdkUwNjYzbklibGxZb2trYkxpZUVnd3lkcDNWUFpHSXI2aE5kZnd5bEdpU2lHMmw

N045bG1lVVhoCmt6MjZoWG5OeDZXRzdvL2d1aXBLdUpWeHZmT1NXS0tHWmJyZmhRSURBUUFCbzRISE1JSEVNQT

RHQTFVZER3RUIKL3dRRUF3SUZvREFkQmdOVkhTVUVGakFVQmdnckJnRUZCUWNEQVFZSUt3WUJCUVVIQXdJd0R

BWURWUjBUQVFILwpCQUl3QURBZEJnTlZIUTRFRmdRVVZUQm5ESjJYRUtCMkRRTlJZY2c5SFlsL2VCQXdId1lE

VlIwakJCZ3dGb0FVCm5RejNLdXhySWlyZCs2ZHNkVmV0dDRWaiswQXdSUVlEVlIwUkJENHdQSWNFckJJQUFZY0

VaRURtSUljRVpFYWsKeUljRVpFRG1INGNFWkVha3dZY0VaRURtSG9jRVpFYWt2b2NFWkVEbUlZY0VhZ3hkSm9j

RVpFSUFiREFOQmdrcQpoa2lHOXcwQkFRc0ZBQU9DQVFFQUNFc2JBOWh6cHp6YWdwL2RMemQremx2anJVQ0dqNV

FBQjNmQlN0OEN4Z2loCnhKNzBLeWV5TDdVVExOZDRNd09Tcjd0VGxhMTFmZTE4VkRvRUNDV0NzY0FabXZEK014e

VJ2alFaL1NjY29xMGwKRkc5RFlBU3dOL2RhMURaeTY2L0FJdllaWEV1WmxQbGtSNnlrQ1F4MHJNYjJtdVdSemdJ

TS9MUTNwSFVYenJ4cQo3cUlreEtJeWlHZC8yUDdvcFBtSzMvemJIU2wyVnNnc1pFL2tJb1ZNQ3BYc1NEaml2aEpHN

Ulya1lyamNSa2JzCmV2cDI2b0M0K0lKNkNYNjh0TDROTkFxNzFoRmh1QTJ1TGhVLzFhc3NOY0w4RFpFNnFvTlFpW

UZXQjVheE81NEsKZXlxRkM3dDBTbEVNYTFRVnNlbklwTVlTRmRlYTZselhtN3lXSFVCVEdBPT0KLS0tLS1FTkQgQ

0VSVElGSUNBVEUtLS0tLQo=

client-key-data:LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVkbjRSdlR3Nka0YKVVBL1k1cERvaHViT

05BaUZsMU5oSWJRM2pTcmYzL0xjNUtjdFgzZk5IaHE4TWFuWlhiRWZFSwpjU2x1UjlSbitCR0RPWm5sY0xlOTNF

ZHEwT0R4VGxpdXpoU1h6S1RBSHJmdEtsaUhLeHJVdWRGUVlwakdoWCtOCktPalFMUDN2RTA2NjNuSWJsbFlva

2tiTGllRWd3eWRwM1ZQWkdJcjZoTmRmd3lsR2lTaUcybDQ3TjlsbWVVWGgKa3oyNmhYbk54NldHN28vZ3VpcE

t1SlZ4dmZPU1dLS0daYnJmaFFJREFRQUJBb0lCQUZRVUhlR2ZIT2xYNkFFbQo0eHd1YVZTMTllNGVtRzlJRE

RDZ1NoUkx6Q2RyUWI3N2tXeGZPdUN5Ny9VSDdaOWZzZGU2dlo5RUtkTEhTdm1ICnR3QU5nNktjZXZPR1IvWl

Q0VnpVSnQzTWttT2JiSDJXTGVjcS9wbU9ySEFWdExZTW9rUkR4T1pwK0RkaXlERUEKQ2xoVUZaSW9yQnQyRG

k3OXdQOS9heXFQdWEwSCttK0NpV2JSYzhodkRSZWtIZnd2b2pXTWtzNVNUNkZhSmVzNwpXQW1GNnVTaEs2Y3

ovZjV5QjgvNjVmQ0tvYVN2RFlkdk1HVHZ2U3BqRlBoZVlScHRnREVWd2VJMElaRFBXdDQ4CmpnWklpQjVLb

pOdXprc1l6eEUvWnRhV05PS3QxVmtKYTduQ1M2SitOM0JESDFwOE9QVWk0ZVpDVTRzd05kRVMKVDRoV0ZZRU

NnWUVBOGthR0Y3aGxmSEhBZkwya3MwNUJNb01mTktOUFFkSkxYV01ZUVk0L0FwYWJlaWhOVzZRSwpabDJ1

cjNoRkFZZ3B6ZFp3RkUzbEx5VmhnODZSS05XNVZvSWcwUW5qWEpBc1U5VGt1KzNzVXRqWnFHTkNmdWRHClB

1dG1qWFNwR1NvQWQxZDdwQkNkbEdMYWZBd1ArREI3VkFCNHRTQklVd1NHY3hDb2pUOU92T0VDZ1lFQTVCc

2gKcnpOTHZJcU81MlpyL2xlZ3BWRC96aFhZWmNhaDhQcFFLNUY1VkJNa3MyalRNL25uVXdvYzl1QXllUlYxbm

1lTApNajlXTmI2QkhZNXNRZXZaNUFPQ2pHWGxyL24zd29qSitTTVpyQnlLMVVqdjBVakdXcVlncHBXMUxhM21

MREV5CmpGbDBhcnB5MllRSkxoK1VoU3R0c2ZKU25qdVVmTXJVR3pRUTh5VUNnWUIyRDJmSXAxTE5FYUY3Sis

3YWNZZlQKMVpHZlZQV0tYYS9jRWkzL3hCRndjWFBTVTFGZkZ0RDZrU3hPMVl6SzhrOXN2dEpmRXBaY0l4c2

gzOGRjM3NreQpIcmRmSmpKbEtOeHcvWTE1QnJmaXAwbHBoUFVpWWhFWkdCMGhVWGdWaXlJdkJiSjZnSjVK

Y09LSEVGbTMxK2hCClJ2bUxTZS8waElBQUVsNFFkb2tvQVFLQmdRQzd6Q3FiVjV3UENmUkZSdW02YU9KMX

VJNGlXWkhqbVBsU3NJSzQKbS9oTDQ4YmZmbm9EM01jNmNxVU9DOThDR1V6UXNXYkVZNmpTYnBsV2dCOVkxcG

g1UlBxQ0pKSkpvMzc3eGlxaQoxdWNYOEJmTktWTm45b1ozc3paR2NCTE9ITkhYcUZsNWUxeUJVaWVrTlRSc

HFNNWFKVHNXdWU2VEgzSk1tNkN0CkZOeXZrUUtCZ0VlY05hN2UzNTRJcEtlMGowZ0dzWXZuVXNFOVRKSkRzd

mhjYmRhL0E5TEZvV2tRNGFHVmt2SlgKSG5yb29ZWDg2OU1FM2YyaHNxUmVsMng2a1phZWF6ZWNnWld0RzkvR2k

zWXRqVWJZVktzU2pWZDVhSkFrM2k1cwpEVWRmMFZUU1QrR0hjRDUzL2NkK3M3WnA1WkFzdWx1czdnQmFPWkdzO

Ux6VXY5aHIrRzJWCi0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

- 序号1:保持不变既可,默认是kubernetes

- 序号2:填写kubeconfig文件中的clusters.cluster.server的地址

- 序号3: 获取kubeconfig文件中的certificate-authority-data的内容并转化成base64 encoded文件

ecbo xxx | base64 -d > /opt/crt/ca.crt

将ca.crt的内容填写到jenkins kubernetes的Kubernetes服务证书key栏中获取

kubeconfig中client-certificate-data和client-key-data的内容并转化成base64 encoded文件

echo xxxxx== | base64 -d > /opt/crt/client.crt echo xxxxx== | base64 -d > /opt/crt/client.key # 生成Client P12认证文件cert.pfx,并下载至本地 openssl pkcs12 -export -out /opt/crt/cert.pfx -inkey /opt/crt/client.key -in /opt/crt/client.crt

-certfile /opt/crt/ca.crt Enter Export Password: Verifying - Enter Export Password: # 注:自定义一个password并牢记

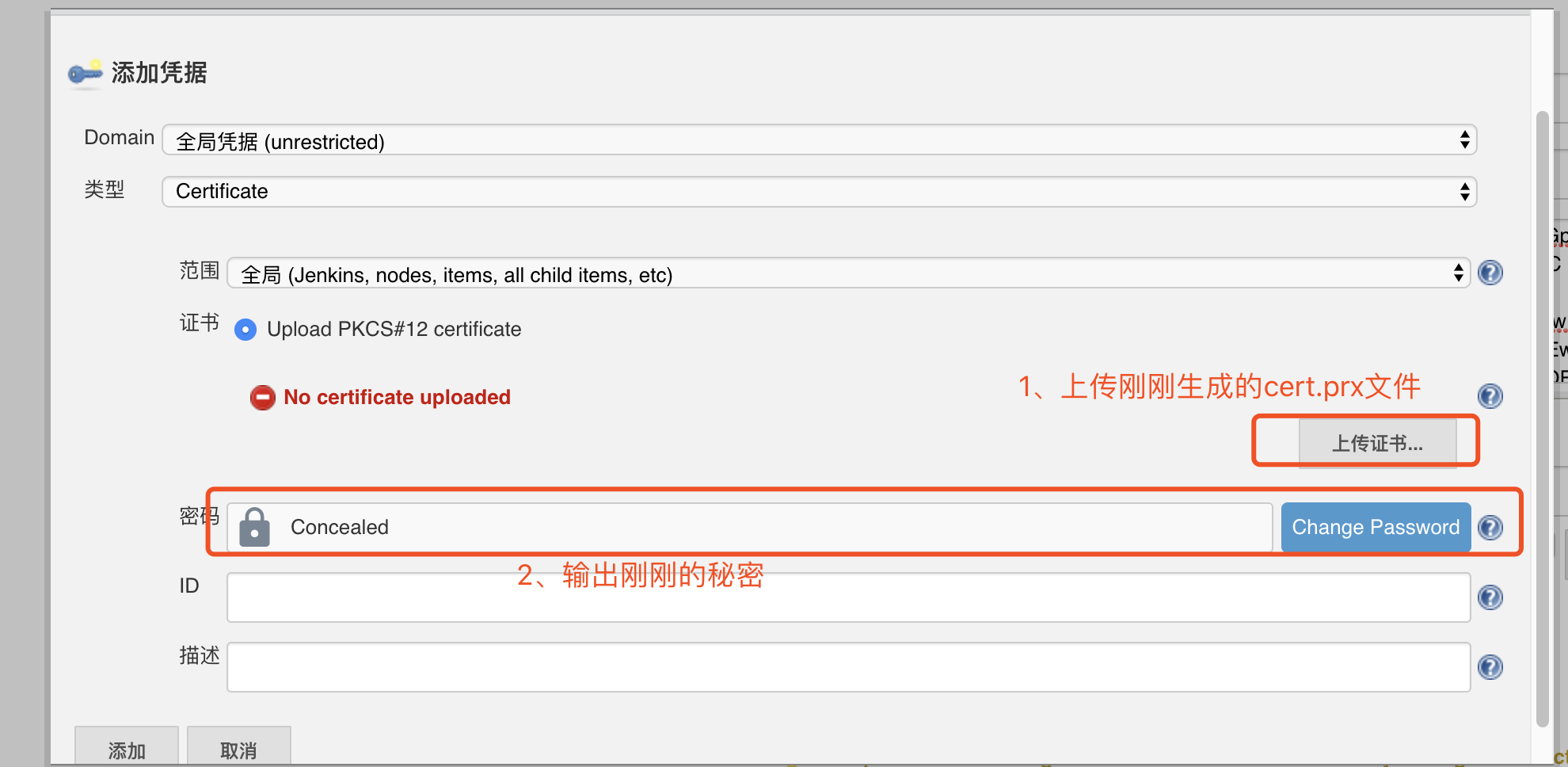

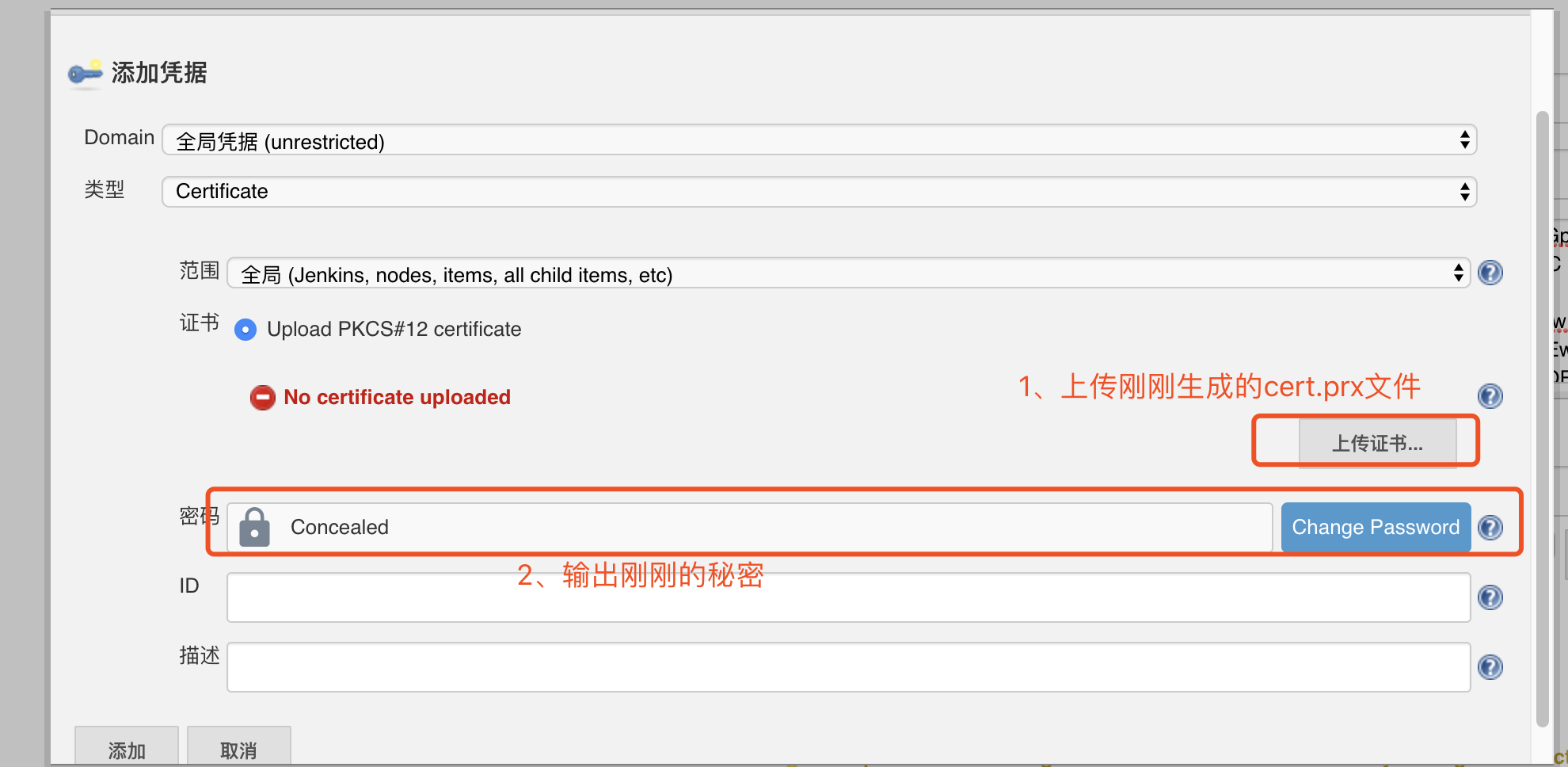

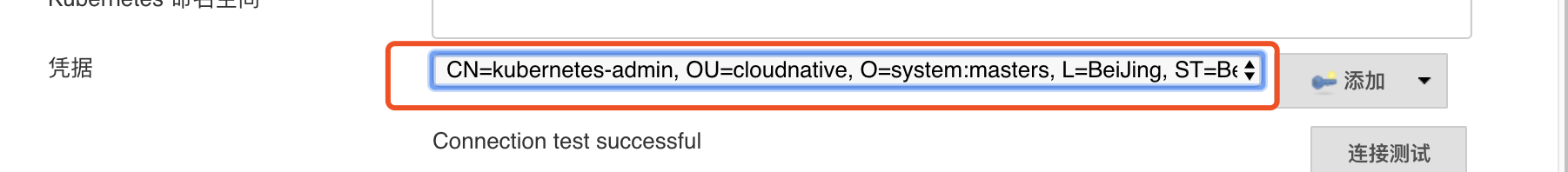

- 序号4: 在云kubernetes中添加凭证

注意: Upload certificate上次刚生成并下载至本地的cert.pfx文件,Password值添加生成cert.pfx文件时输入的密钥,然后在序号4中选择这个证书

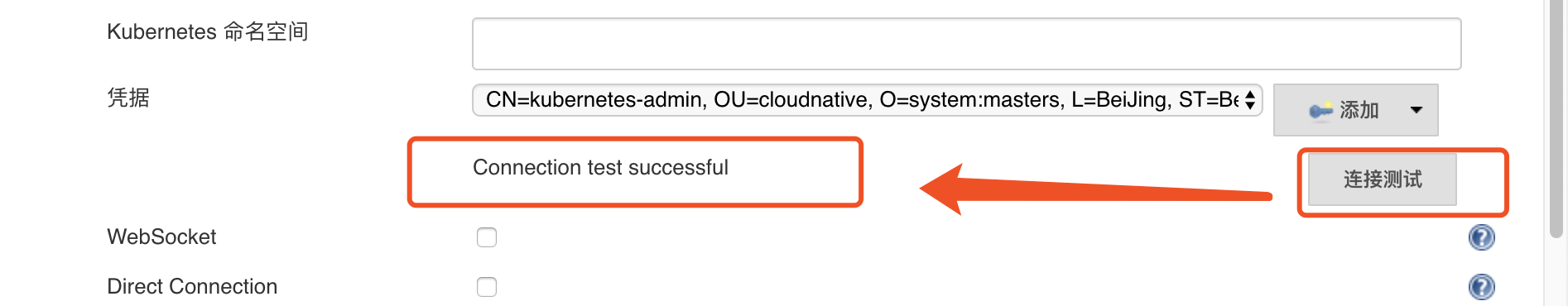

最后点击连接测试:出现代表测试成功

6. 测试并验证

好了,通过Kubernetes安装Jenkins Master完毕并且已经配置好了连接,接下来,我们可以配置 一个Job测试以下是否会成功发布

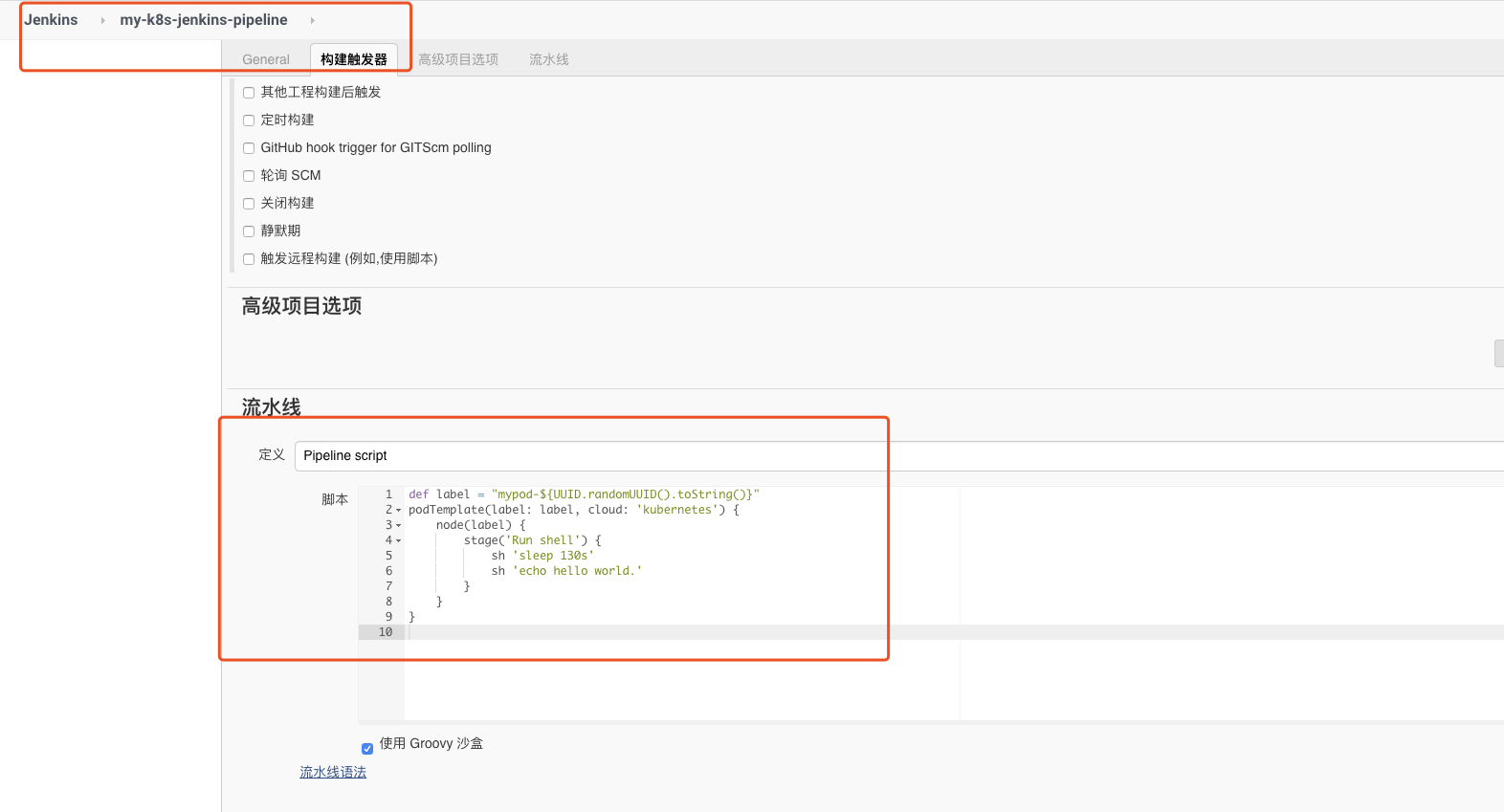

pipeline 类型支持

创建一个Pipeline类型Job并命名为my-k8s-jenkins-pipeline,然后Pipeline脚本处填写一个简单的测试脚本如下:

def label = "mypod-${UUID.randomUUID().toString()}"

podTemplate(label: label, cloud: 'kubernetes') {

node(label) {

stage('Run shell') {

sh 'sleep 130s'

sh 'echo hello world.'

}

}

}

执行构建,此时去构建队列里面,可以看到有一个构建任务,点击立即构建之后,等初始化成功之后,会成功发布,我们通过kubectl命令行,可以看到整个自动创建和删除过程。

注意: 示例中使用的镜像,如果直接拉取会有超时的情况,可以使用以下命令在机器上提前执行

docker pull hub.baidubce.com/jpaas-public/jenkins/jnlp-slave:v0

docker tag hub.baidubce.com/jpaas-public/jenkins/jnlp-slave:v0 jenkins/jnlp-slave:4.0.1-1