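Document overview:

This document describes how to use AI Training Operator with the Horovod training framework in CCE to make distributed training elastic and fault-tolerant.

Model training is a key stage of deep learning, and training jobs for complex models run for a long time and demand substantial compute. In traditional distributed deep learning, the number of Workers cannot be adjusted once a training job has been submitted. Elastic training lets you change the number of Workers while the job is running. Fault tolerance covers abnormal conditions such as a node failure that causes Pod eviction: the failed Worker is rescheduled onto a new node and continues its work, so a single Worker failure does not interrupt the whole training job.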

Prerequisites

- The AI Training Operator component is installed in CCE.

- Horovod or PaddlePaddle is used as the distributed training framework.

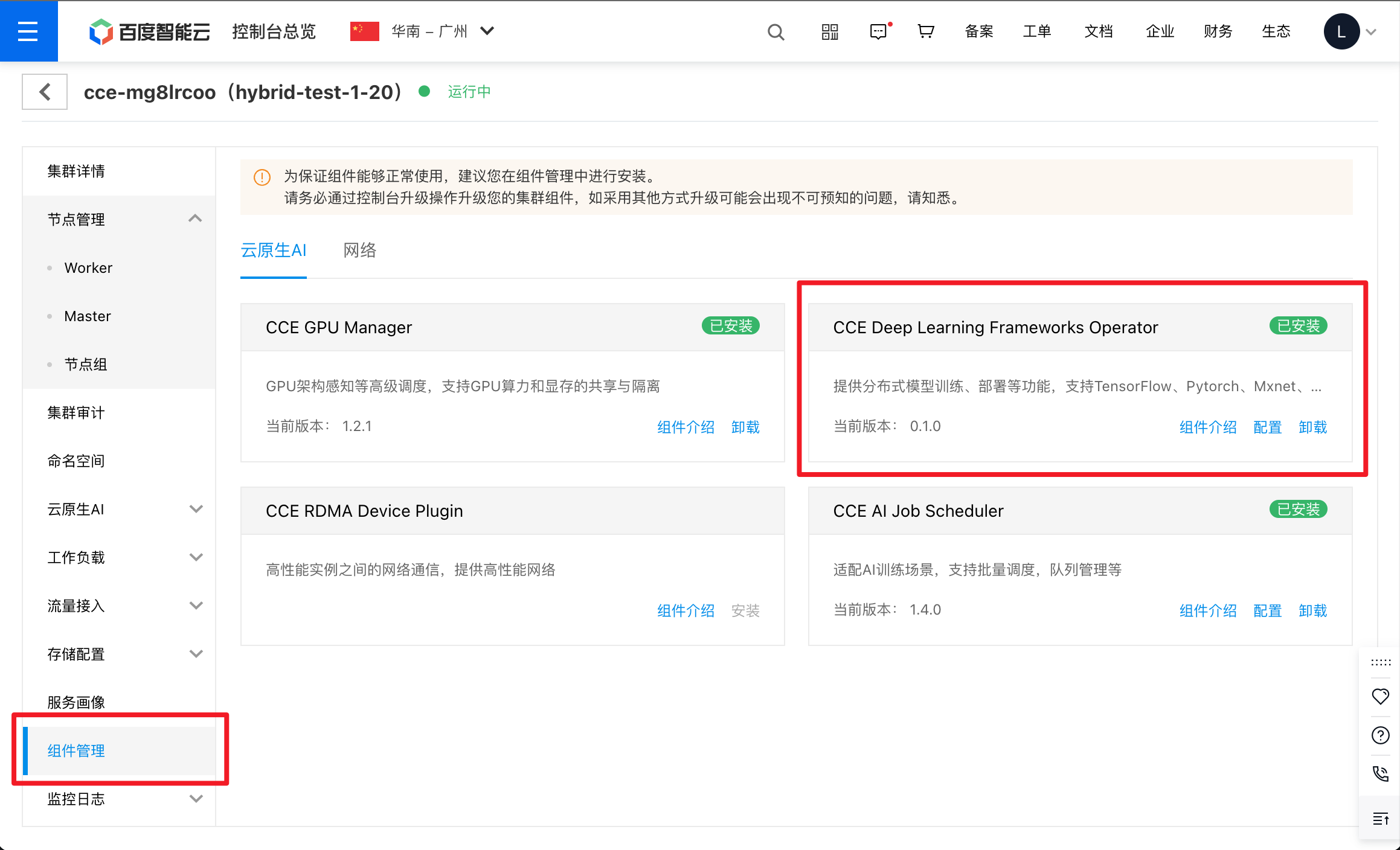

Component installation

- In the CCE console, install the AI Training Operator component.

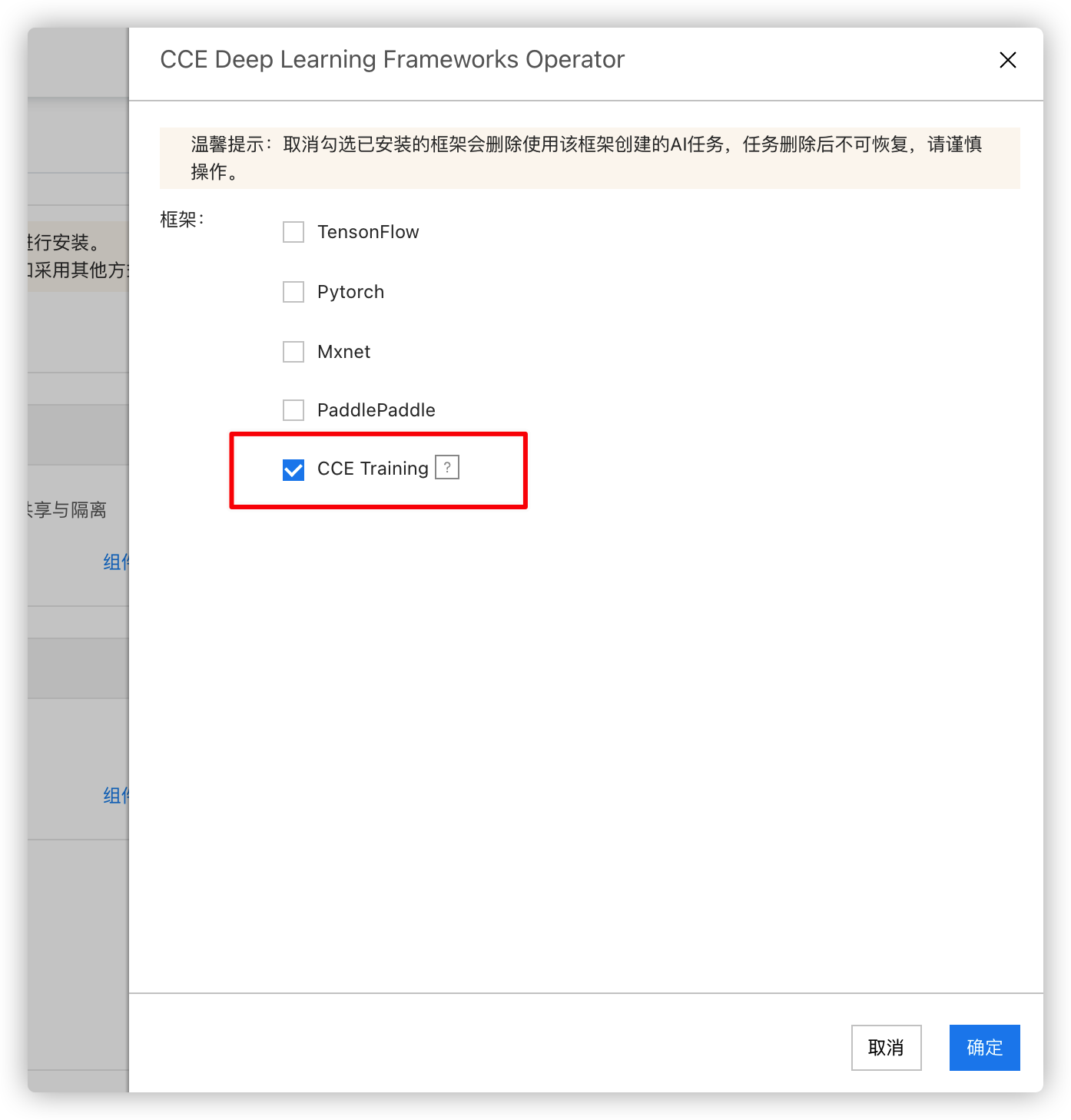

- Select CCE Training and confirm the installation.

Job submission

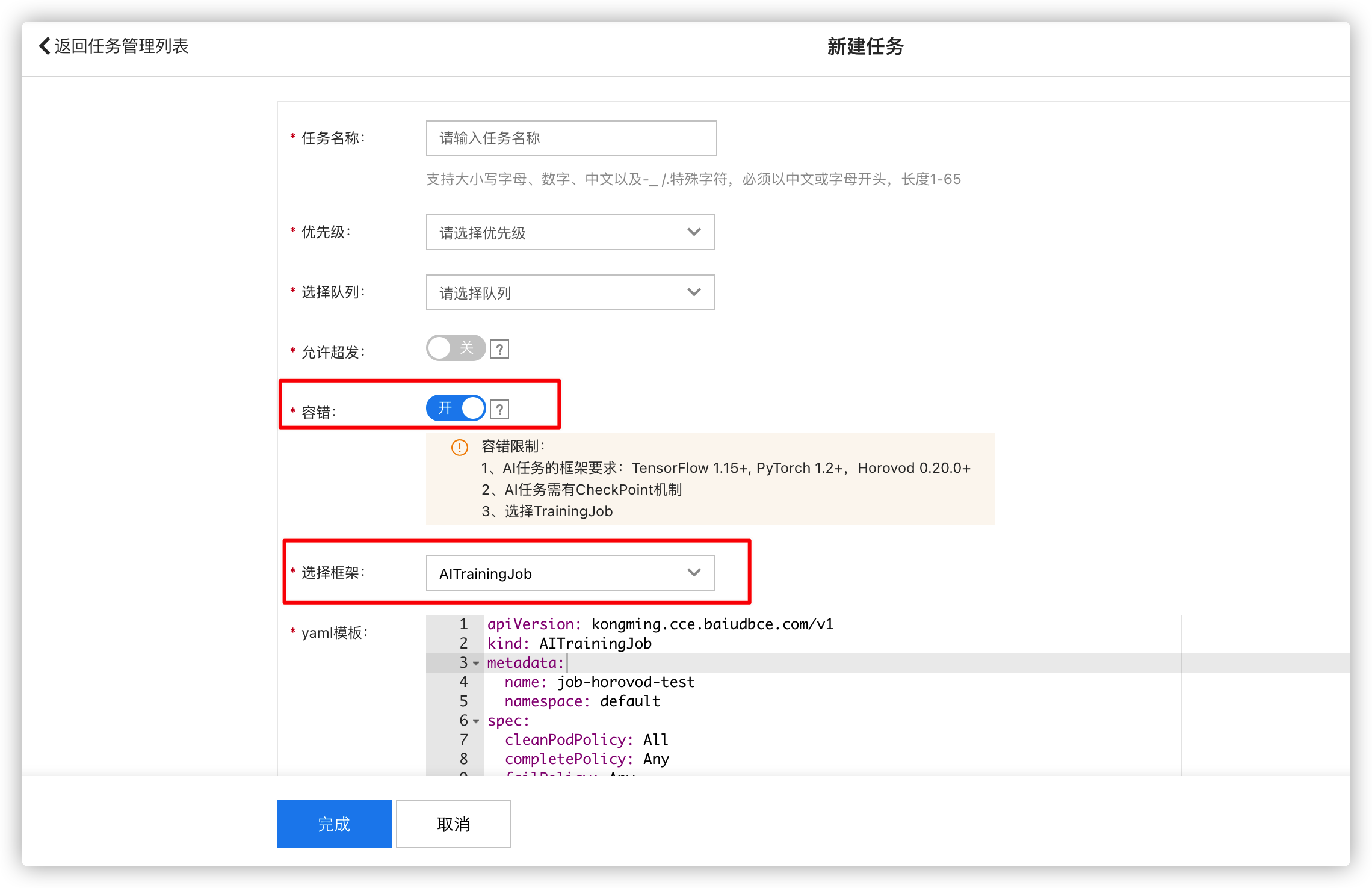

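In the CCE cluster console, go to Cloud Native AI → Job Management and submit a job with the framework set to AITrainingJob. To make the job fault-tolerant, select the fault tolerance option. Elastic training also requires fault tolerance to be enabled.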

The console generates a YAML template for an elastic, fault-tolerant training job:

    apiVersion: kongming.cce.baiudbce.com/v1
    kind: AITrainingJob
    metadata:
      name: test-horovod-elastic
      namespace: default
    spec:
      cleanPodPolicy: None
      completePolicy: Any
      failPolicy: Any
      frameworkType: horovod
      faultTolerant: true
      plugin:
        ssh:
        - ""
        discovery:
        - ""
      priority: normal
      replicaSpecs:
        launcher:
          completePolicy: All
          failPolicy: Any
          faultTolerantPolicy:
          - exitCodes: 129,101
            restartPolicy: ExitCode
            restartScope: Pod
          - exceptionalEvent: nodeNotReady
            restartPolicy: OnNodeFail
            restartScope: Pod
          maxReplicas: 1
          minReplicas: 1
          replicaType: master
          replicas: 1
          restartLimit: 100
          restartPolicy: OnNodeFailWithExitCode
          restartScope: Pod
          restartTimeLimit: 60
          restartTimeout: 864000
          template:
            metadata:
              creationTimestamp: null
            spec:
              initContainers:
              - args:
                - --barrier_roles=trainer
                - --incluster
                - --name=$(TRAININGJOB_NAME)
                - --namespace=$(TRAININGJOB_NAMESPACE)
                - --dns_check_svc=kube-dns
                image: registry.baidubce.com/cce-plugin-dev/jobbarrier:v0.9-1
                imagePullPolicy: IfNotPresent
                name: job-barrier
                terminationMessagePath: /dev/termination-log
                terminationMessagePolicy: File
              restartPolicy: Never
              schedulerName: volcano
              securityContext: {}
              containers:
              - command:
                - /bin/bash
                - -c
                - export HOROVOD_GLOO_TIMEOUT_SECONDS=300 && horovodrun -np 3 --min-np=1 --max-np=5 --verbose --log-level=DEBUG --host-discovery-script /etc/edl/discover_hosts.sh python /horovod/examples/elastic/pytorch/pytorch_synthetic_benchmark_elastic.py --num-iters=1000
                env:
                image: registry.baidubce.com/cce-plugin-dev/horovod:master-0.2.0
                imagePullPolicy: Always
                name: aitj-0
                resources:
                  limits:
                    cpu: "1"
                    memory: 1Gi
                  requests:
                    cpu: "1"
                    memory: 1Gi
                volumeMounts:
                - mountPath: /dev/shm
                  name: cache-volume
              dnsPolicy: ClusterFirstWithHostNet
              terminationGracePeriodSeconds: 30
              volumes:
              - emptyDir:
                  medium: Memory
                  sizeLimit: 1Gi
                name: cache-volume
        trainer:
          completePolicy: None
          failPolicy: None
          faultTolerantPolicy:
          - exceptionalEvent: "nodeNotReady,PodForceDeleted"
            restartPolicy: OnNodeFail
            restartScope: Pod
          maxReplicas: 5
          minReplicas: 1
          replicaType: worker
          replicas: 3
          restartLimit: 100
          restartPolicy: OnNodeFailWithExitCode
          restartScope: Pod
          restartTimeLimit: 60
          restartTimeout: 864000
          template:
            metadata:
              creationTimestamp: null
            spec:
              containers:
              - command:
                - /bin/bash
                - -c
                - /usr/sbin/sshd && sleep infinity
                image: registry.baidubce.com/cce-plugin-dev/horovod:master-0.2.0
                imagePullPolicy: Always
                name: aitj-0
                env:
                - name: NVIDIA_DISABLE_REQUIRE
                  value: "true"
                - name: NVIDIA_VISIBLE_DEVICES
                  value: "all"
                - name: NVIDIA_DRIVER_CAPABILITIES
                  value: "all"
                resources:
                  limits:
                    baidu.com/v100_32g_cgpu: "1"
                    baidu.com/v100_32g_cgpu_core: "20"
                    baidu.com/v100_32g_cgpu_memory: "4"
                  requests:
                    baidu.com/v100_32g_cgpu: "1"
                    baidu.com/v100_32g_cgpu_core: "20"
                    baidu.com/v100_32g_cgpu_memory: "4"
                volumeMounts:
                - mountPath: /dev/shm
                  name: cache-volume
              dnsPolicy: ClusterFirstWithHostNet
              terminationGracePeriodSeconds: 300
              volumes:
              - emptyDir:
                  medium: Memory
                  sizeLimit: 1Gi
                name: cache-volume
              schedulerName: volcano
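In the template, the launcher's `horovodrun` flags (`-np 3 --min-np=1 --max-np=5`) carry the same values as the trainer's `replicas`, `minReplicas`, and `maxReplicas`. The following is a minimal sketch of a sanity check you could run before submitting an edited template. It assumes the two sets of values are meant to agree, which the source does not state explicitly. The helper names `horovodrun_np_flags` and `check_against_trainer` are hypothetical and not part of any CCE or Horovod tooling.

```python
import shlex


def horovodrun_np_flags(command: str) -> dict:
    """Extract -np, --min-np and --max-np from a horovodrun command line."""
    tokens = shlex.split(command)
    flags = {}
    i = 0
    while i < len(tokens):
        tok = tokens[i]
        if tok == "-np" and i + 1 < len(tokens):
            flags["np"] = int(tokens[i + 1])
            i += 1  # skip the value that follows -np
        elif tok.startswith("--min-np="):
            flags["min_np"] = int(tok.split("=", 1)[1])
        elif tok.startswith("--max-np="):
            flags["max_np"] = int(tok.split("=", 1)[1])
        i += 1
    return flags


def check_against_trainer(flags: dict, trainer: dict) -> list:
    """Return a list of mismatch descriptions (empty when consistent)."""
    pairs = [("np", "replicas"), ("min_np", "minReplicas"), ("max_np", "maxReplicas")]
    problems = []
    for flag, field in pairs:
        if flags.get(flag) != trainer.get(field):
            problems.append(f"{flag}={flags.get(flag)} but {field}={trainer.get(field)}")
    return problems


# Values copied from the template's launcher command and trainer replica spec.
command = (
    "export HOROVOD_GLOO_TIMEOUT_SECONDS=300 && horovodrun -np 3 --min-np=1 "
    "--max-np=5 --verbose --log-level=DEBUG "
    "--host-discovery-script /etc/edl/discover_hosts.sh "
    "python /horovod/examples/elastic/pytorch/pytorch_synthetic_benchmark_elastic.py "
    "--num-iters=1000"
)
trainer = {"replicas": 3, "minReplicas": 1, "maxReplicas": 5}

flags = horovodrun_np_flags(command)
problems = check_against_trainer(flags, trainer)
print(flags)
print(problems)
```

An empty list means the launcher flags and the trainer replica fields agree. Any entries name the flag and field that differ.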

Submit the job with 3 Workers specified:

    NAME                                    READY   STATUS     RESTARTS   AGE
    test-horovod-elastic-launcher-vwvb8-0   0/1     Init:0/1   0          6s
    test-horovod-elastic-trainer-q7gmp-0    1/1     Running    0          7s
    test-horovod-elastic-trainer-spkb8-1    1/1     Running    0          7s
    test-horovod-elastic-trainer-sxf6s-2    1/1     Running    0          7s

Elastic scaling scenario

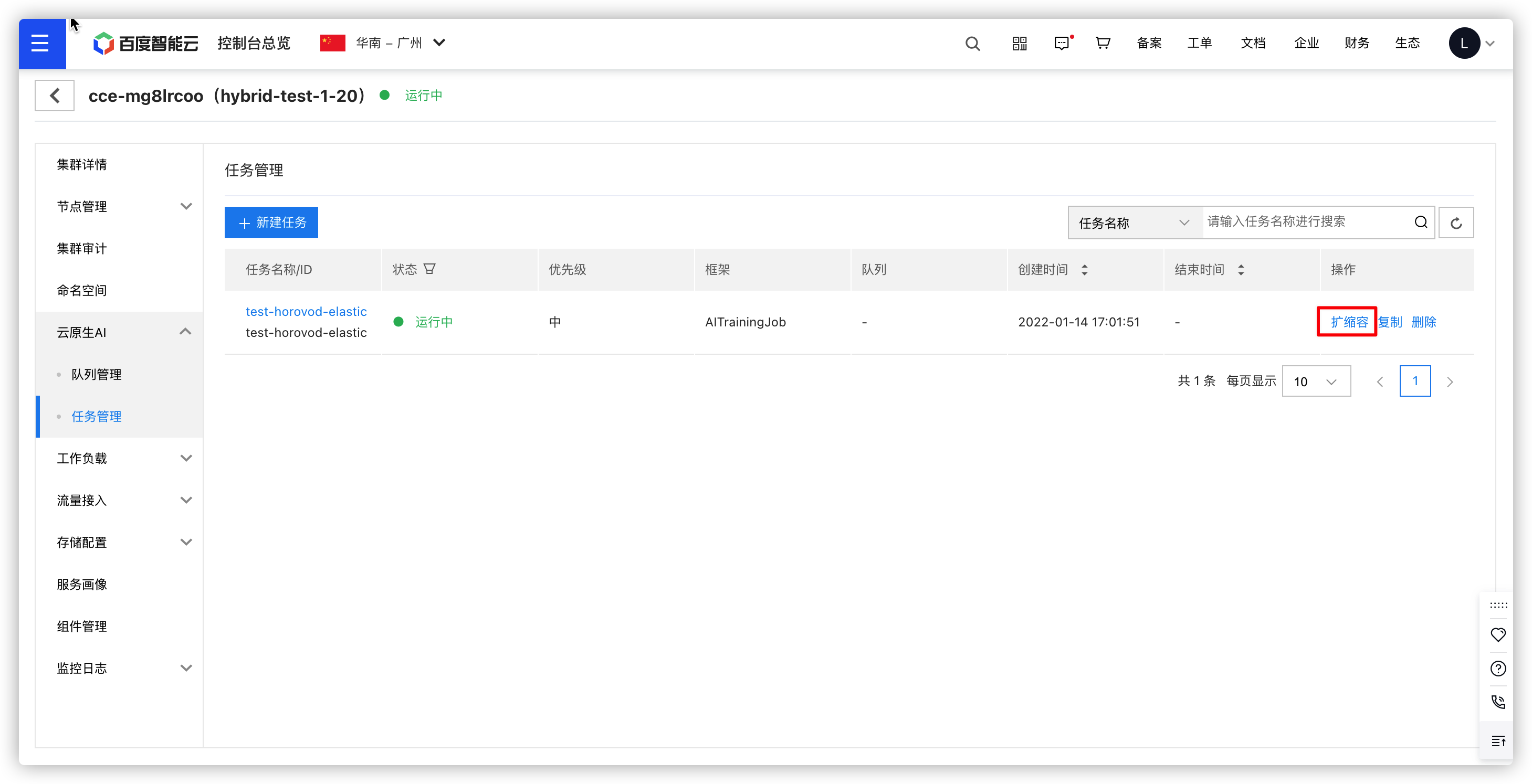

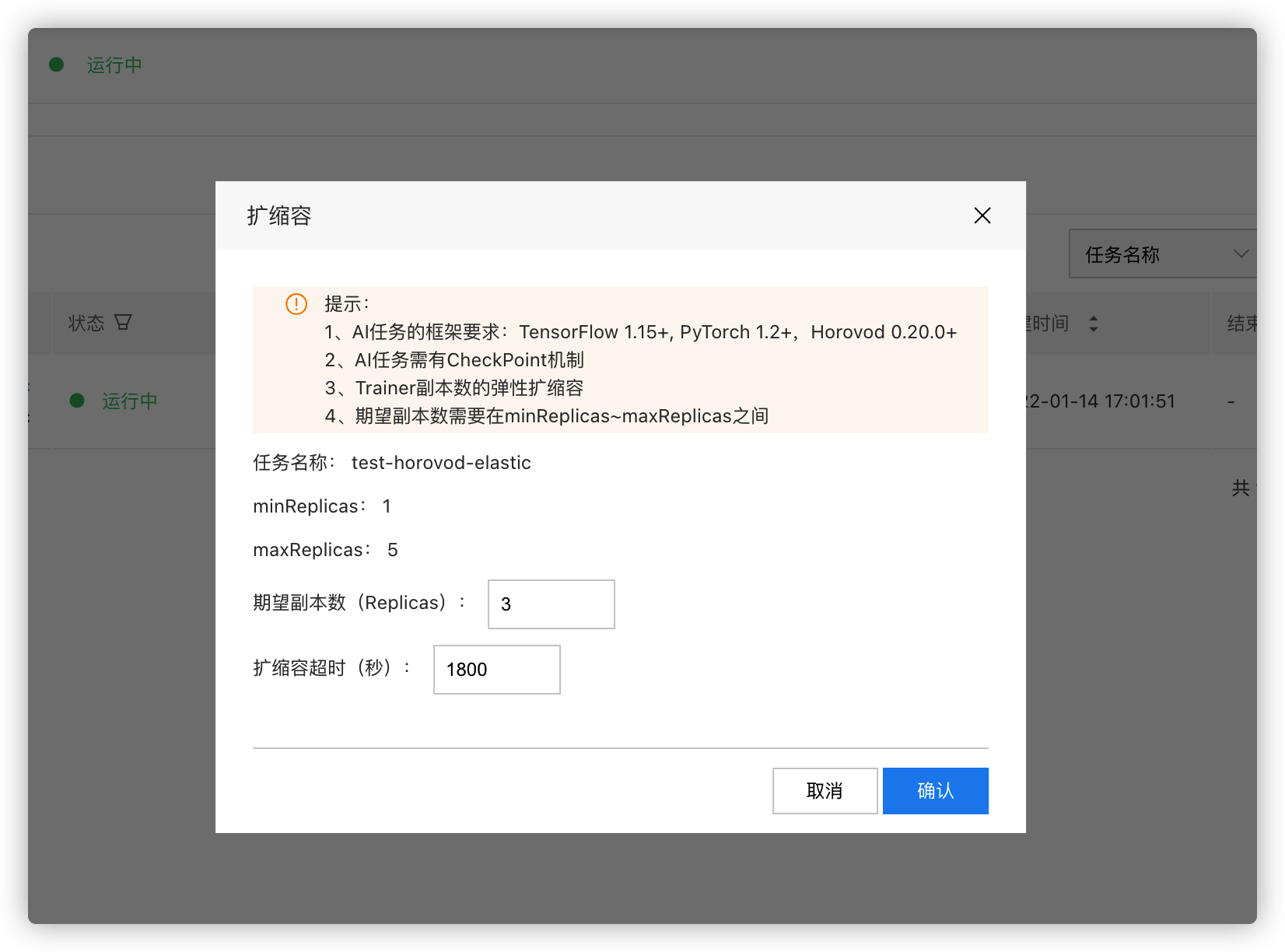

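In the CCE console, change the number of Workers of a running training job and specify a scale-out timeout.

Alternatively, edit the YAML of this CR directly in the cluster and set spec.replicaSpecs.trainer.replicas to the desired number of Workers to trigger scaling.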
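As a hedged illustration of the direct-edit route, the sketch below builds a JSON merge patch that sets only `spec.replicaSpecs.trainer.replicas`. It rejects values outside `minReplicas`/`maxReplicas` on the assumption that the Operator expects the target to stay within those bounds. The function name `build_scale_patch` is hypothetical, and the resource name `aitrainingjob` in the printed `kubectl patch` command is an assumption about how the CRD is registered in your cluster.

```python
import json


def build_scale_patch(replicas: int, min_replicas: int, max_replicas: int) -> str:
    """Return a JSON merge patch body that sets the trainer replica count."""
    # Assumption: the desired count should stay within minReplicas..maxReplicas.
    if not min_replicas <= replicas <= max_replicas:
        raise ValueError(
            f"replicas={replicas} is outside [{min_replicas}, {max_replicas}]"
        )
    patch = {"spec": {"replicaSpecs": {"trainer": {"replicas": replicas}}}}
    return json.dumps(patch)


# Scale the example job from 3 to 4 Workers (bounds taken from the template).
patch = build_scale_patch(replicas=4, min_replicas=1, max_replicas=5)
print(patch)

# One possible way to apply it; "aitrainingjob" is an assumed resource name.
print(
    "kubectl patch aitrainingjob test-horovod-elastic -n default "
    f"--type merge -p '{patch}'"
)
```

A merge patch leaves every other field of the CR untouched, which avoids accidentally overwriting the rest of the spec while the job is running.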

The job status then shows a corresponding scale-out event, and a new Worker Pod is created in the cluster and joins the run.

    status:
      RestartCount:
        trainer: 0
      conditions:
      - lastProbeTime: "2022-01-14T09:01:52Z"
        lastTransitionTime: "2022-01-14T09:01:52Z"
        message: all pods are waiting for scheduling
        reason: TrainingJobPending
        status: "False"
        type: Pending
      - lastProbeTime: "2022-01-14T09:01:53Z"
        lastTransitionTime: "2022-01-14T09:01:53Z"
        message: pods [test-horovod-elastic-launcher-vk9c2-0] creating containers
        reason: TrainingJobCreating
        status: "False"
        type: Creating
      - lastProbeTime: "2022-01-14T09:02:27Z"
        lastTransitionTime: "2022-01-14T09:02:27Z"
        message: all pods are running
        reason: TrainingJobRunning
        status: "False"
        type: Running
      - lastProbeTime: "2022-01-14T09:06:16Z"
        lastTransitionTime: "2022-01-14T09:06:16Z"
        message: trainingJob default/test-horovod-elastic scaleout Operation scaleout scale num 1 scale pods [test-horovod-elastic-trainer-vdkk6-3], replicas name trainer job version 1
        status: "False"
        type: Scaling
      - lastProbeTime: "2022-01-14T09:06:20Z"
        lastTransitionTime: "2022-01-14T09:06:20Z"
        message: all pods are running
        reason: TrainingJobRunning
        status: "True"
        type: Running

    NAME                                    READY   STATUS    RESTARTS   AGE
    test-horovod-elastic-launcher-vk9c2-0   1/1     Running   0          7m4s
    test-horovod-elastic-trainer-4zzk4-0    1/1     Running   0          7m5s
    test-horovod-elastic-trainer-b5rc2-2    1/1     Running   0          7m5s
    test-horovod-elastic-trainer-kdjq2-1    1/1     Running   0          7m5s
    test-horovod-elastic-trainer-vdkk6-3    1/1     Running   0          2m40s

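Fault tolerance scenario

After a training job is created in CCE with fault tolerance enabled, the fault tolerance policy is specified in the faultTolerantPolicy field of the submitted YAML, as follows: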

    faultTolerantPolicy:
    - exceptionalEvent: nodeNotReady,PodForceDeleted
      restartPolicy: OnNodeFail
      restartScope: Pod

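When a Pod exits abnormally with one of the specified exit codes, is evicted because its node becomes NotReady, or is forcibly deleted, the Operator automatically starts a new training Pod to replace the failed one and continue the training job.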
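To make the policy entries easier to read, here is a minimal sketch of how a list of `faultTolerantPolicy` entries could be matched against a failure. It is only an illustrative model of the fields shown in the template (`exitCodes` and `exceptionalEvent` as comma-separated lists), not the Operator's actual implementation, and the function name `should_restart` is hypothetical.

```python
def should_restart(policies, exit_code=None, event=None):
    """Return True if any policy entry covers the given exit code or event."""
    for policy in policies:
        # Both fields are written as comma-separated lists in the template.
        codes = {c.strip() for c in str(policy.get("exitCodes", "")).split(",") if c.strip()}
        events = {e.strip() for e in policy.get("exceptionalEvent", "").split(",") if e.strip()}
        if exit_code is not None and str(exit_code) in codes:
            return True
        if event is not None and event in events:
            return True
    return False


# Policy entries copied from the launcher and trainer sections of the template.
launcher_policies = [
    {"exitCodes": "129,101", "restartPolicy": "ExitCode", "restartScope": "Pod"},
    {"exceptionalEvent": "nodeNotReady", "restartPolicy": "OnNodeFail", "restartScope": "Pod"},
]
trainer_policies = [
    {"exceptionalEvent": "nodeNotReady,PodForceDeleted",
     "restartPolicy": "OnNodeFail", "restartScope": "Pod"},
]

print(should_restart(launcher_policies, exit_code=129))
print(should_restart(launcher_policies, event="PodForceDeleted"))
print(should_restart(trainer_policies, event="PodForceDeleted"))
```

Under this reading, a force-deleted trainer Pod is covered by the trainer policy, while the launcher policy in the template lists only `nodeNotReady` and the two exit codes.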

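For example, after one Pod is forcibly deleted, a new Pod is eventually created to take its place, restoring the original 4 training instances: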

    ➜ kubectl get pods -w
    NAME                                    READY   STATUS              RESTARTS   AGE
    test-horovod-elastic-launcher-vk9c2-0   1/1     Running             0          7m59s
    test-horovod-elastic-trainer-4zzk4-0    1/1     Terminating         0          8m
    test-horovod-elastic-trainer-b5rc2-2    1/1     Running             0          8m
    test-horovod-elastic-trainer-kdjq2-1    1/1     Running             0          8m
    test-horovod-elastic-trainer-vdkk6-3    1/1     Running             0          3m35s
    test-horovod-elastic-trainer-4zzk4-0    0/1     Terminating         0          8m7s
    test-horovod-elastic-trainer-4zzk4-0    0/1     Terminating         0          8m8s
    test-horovod-elastic-trainer-4zzk4-0    0/1     Terminating         0          8m8s
    test-horovod-elastic-trainer-htbz4-0    0/1     Pending             0          0s
    test-horovod-elastic-trainer-htbz4-0    0/1     Pending             0          1s
    test-horovod-elastic-trainer-htbz4-0    0/1     Pending             0          1s
    test-horovod-elastic-trainer-htbz4-0    0/1     Pending             0          1s
    test-horovod-elastic-trainer-htbz4-0    0/1     ContainerCreating   0          1s
    test-horovod-elastic-trainer-htbz4-0    1/1     Running             0          3s