Document overview:

Key concepts

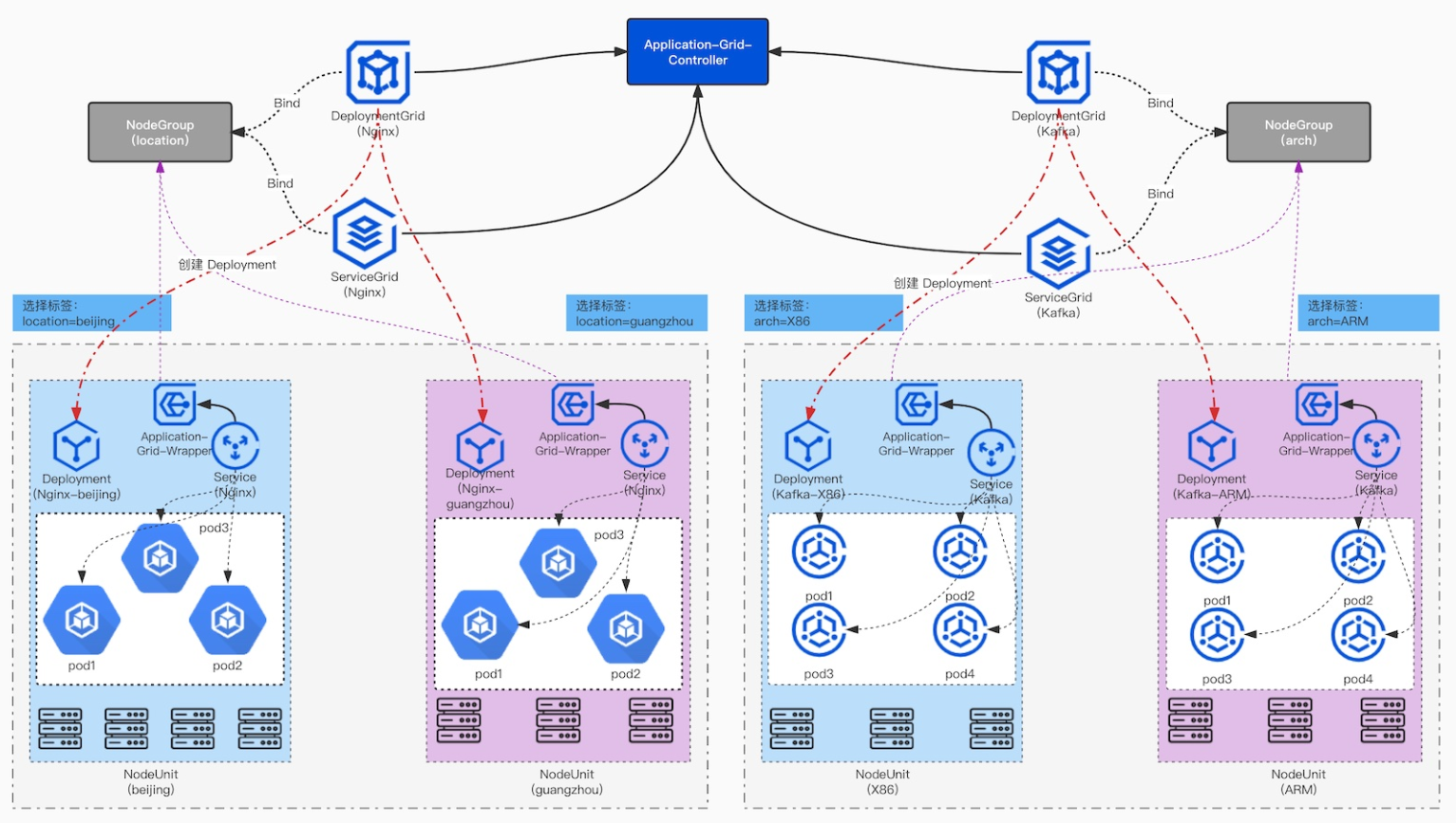

Overall architecture

Basic concepts

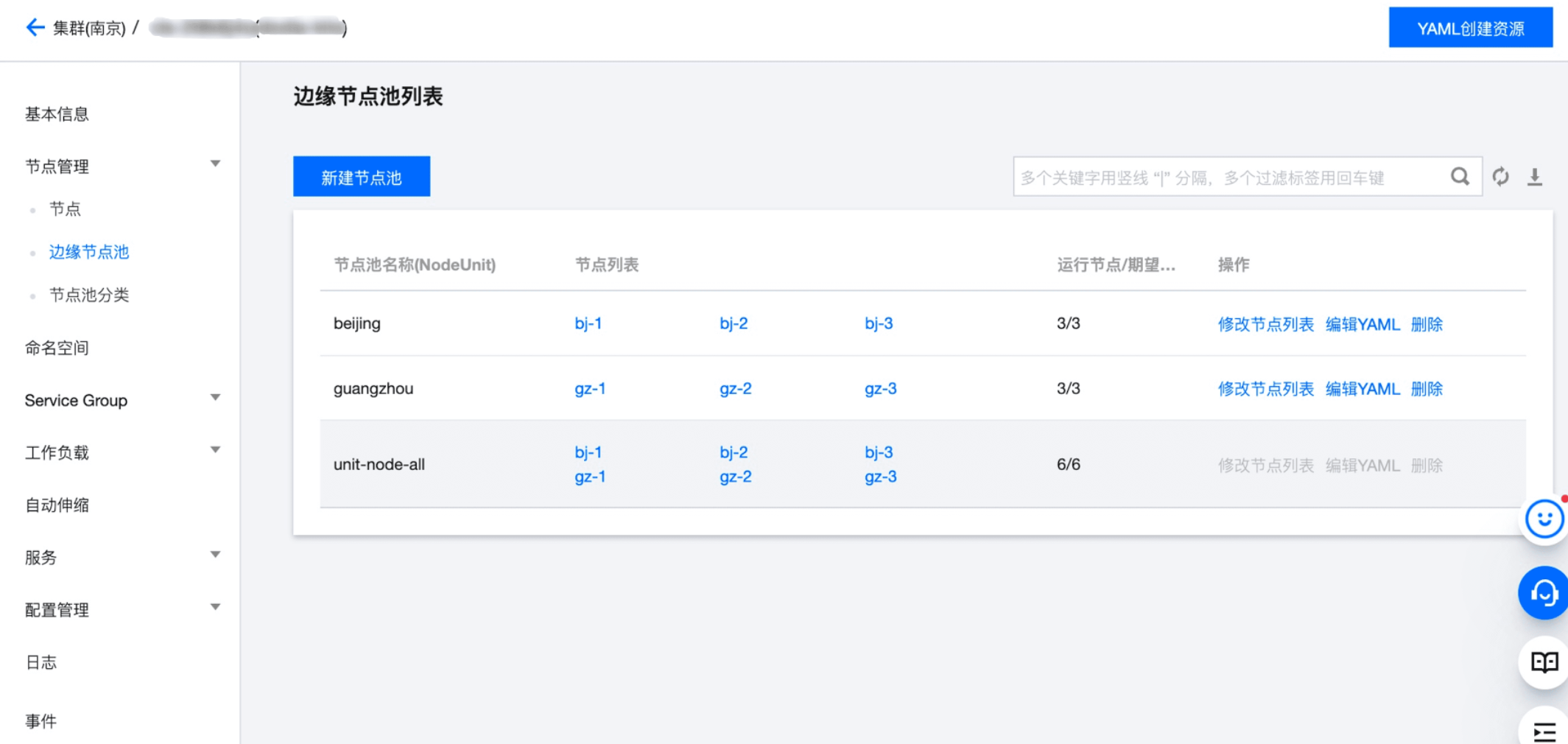

NodeUnit (edge node pool)

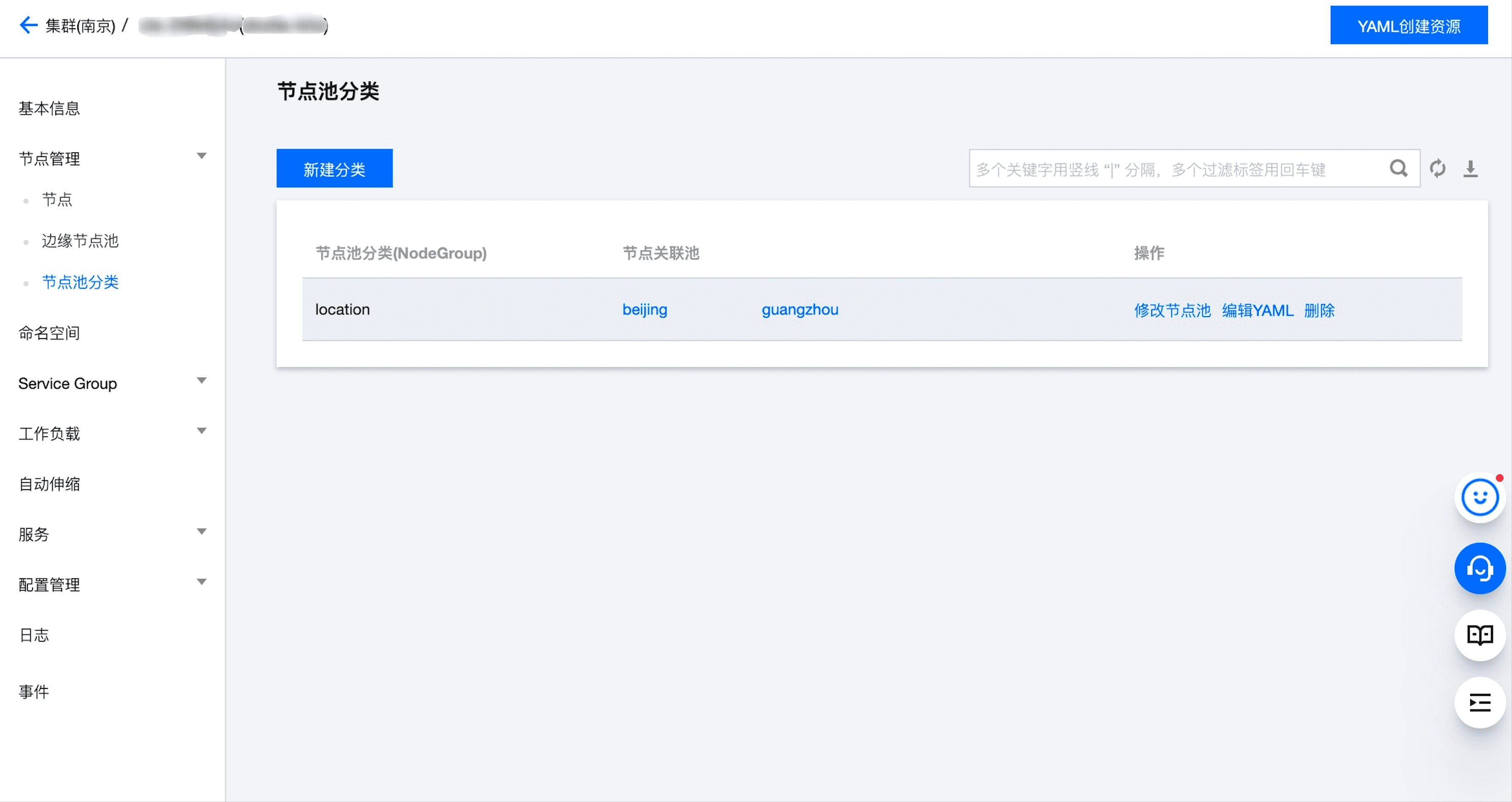

NodeGroup (edge node pool category)

ServiceGroup

    apiVersion: superedge.io/v1
    kind: DeploymentGrid
    metadata:
      name:
      namespace:
    spec:
      gridUniqKey: <NodeLabel Key>
      <deployment-template>

    apiVersion: superedge.io/v1
    kind: StatefulSetGrid
    metadata:
      name:
      namespace:
    spec:
      gridUniqKey: <NodeLabel Key>
      <statefulset-template>

    apiVersion: superedge.io/v1
    kind: ServiceGrid
    metadata:
      name:
      namespace:
    spec:
      gridUniqKey: <NodeLabel Key>
      <service-template>
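The three resource skeletons above share one shape: a `gridUniqKey` plus an ordinary workload or Service spec. The following is a minimal sketch of that wrapping, assuming (as in the demo manifests later in this document) that the wrapped spec sits under `spec.template`. The helper name `make_grid` is hypothetical and not part of SuperEdge.

```python
import json

# Kinds taken from the skeletons above.
GRID_KINDS = {"DeploymentGrid", "StatefulSetGrid", "ServiceGrid"}


def make_grid(kind, name, namespace, grid_uniq_key, template):
    """Wrap a standard workload/Service spec in a *Grid manifest (plain dict).

    Hypothetical helper for illustration only; it just assembles the fields
    shown in the skeletons above.
    """
    if kind not in GRID_KINDS:
        raise ValueError(f"unsupported kind: {kind}")
    return {
        "apiVersion": "superedge.io/v1",
        "kind": kind,
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"gridUniqKey": grid_uniq_key, "template": template},
    }


# A Service spec equivalent to the ServiceGrid demo in this document.
service_spec = {
    "selector": {"appGrid": "echo"},
    "ports": [{"protocol": "TCP", "port": 80, "targetPort": 8080}],
}
manifest = make_grid("ServiceGrid", "servicegrid-demo", "default", "location", service_spec)
print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, since kubectl accepts JSON as well as YAML manifests.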

Procedure

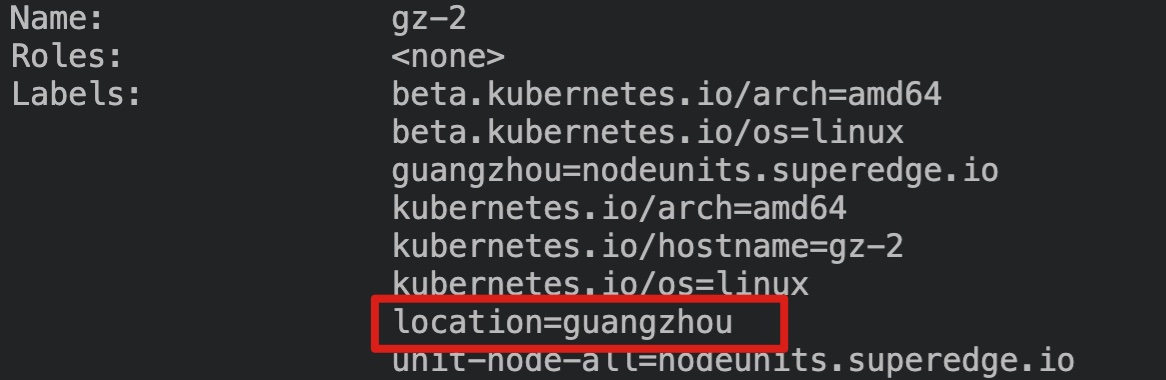

Determine the unique identifier of the ServiceGroup

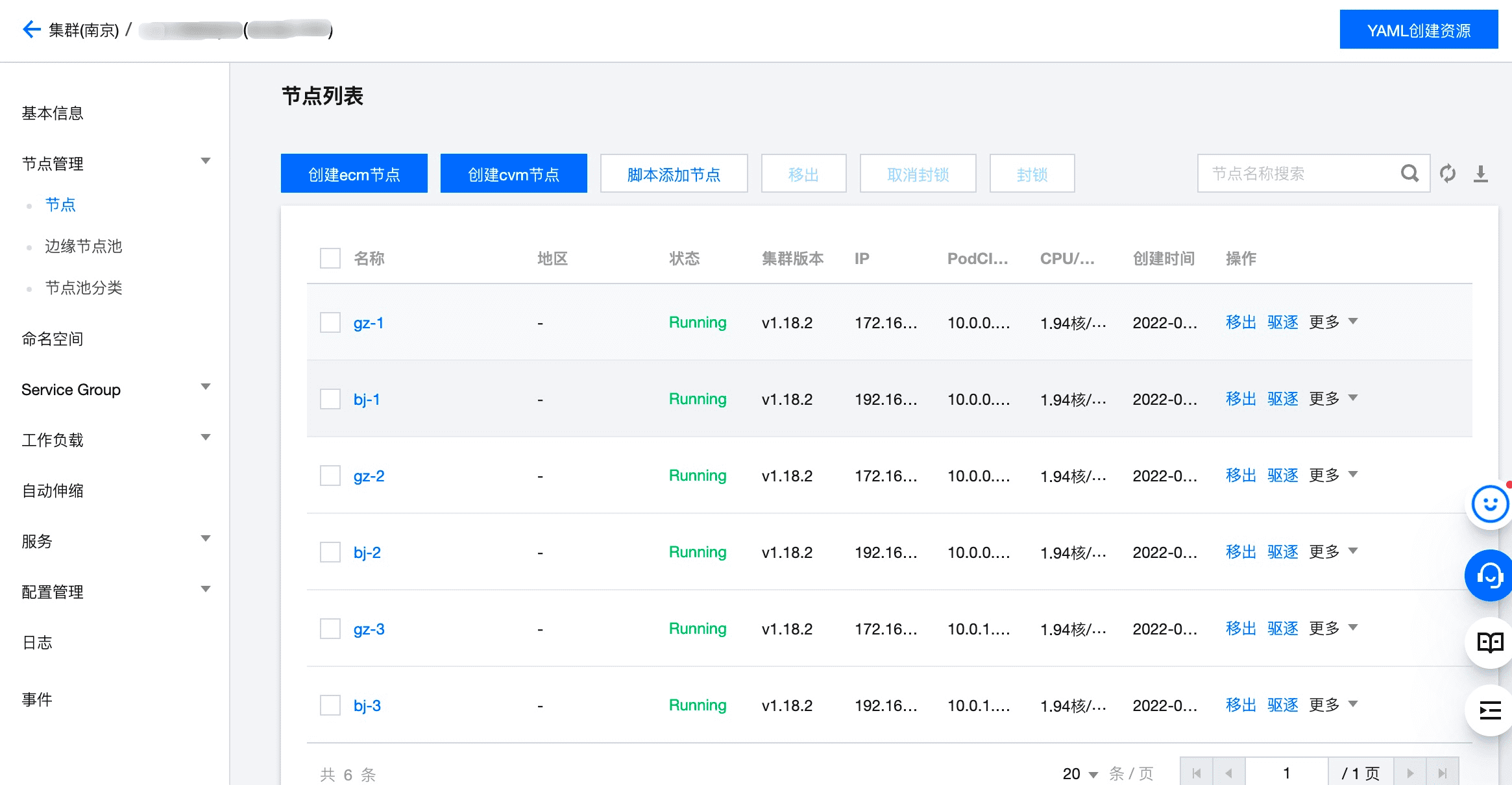

Group the edge nodes

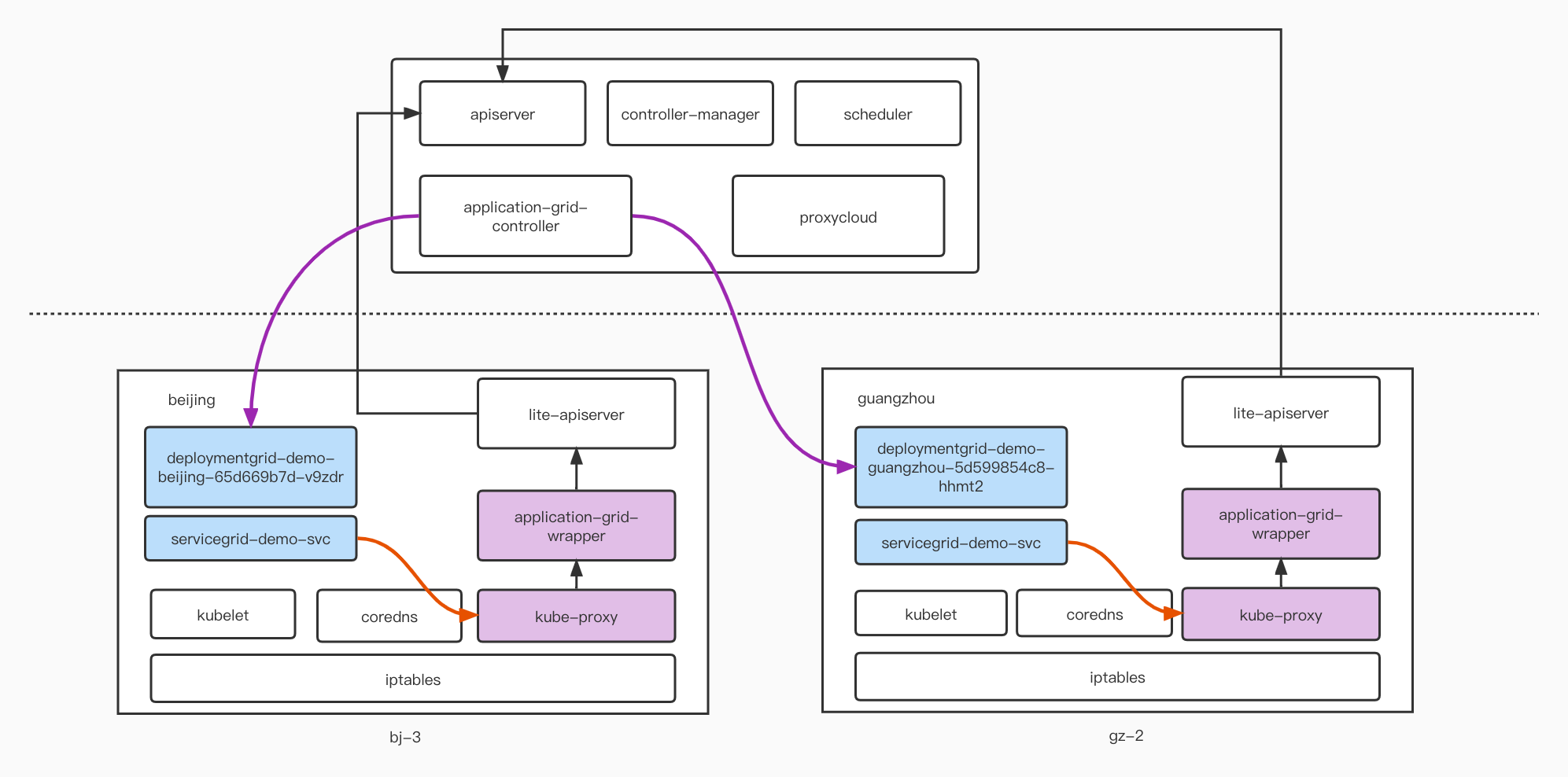

Stateless ServiceGroup

Deploy a DeploymentGrid

    apiVersion: superedge.io/v1
    kind: DeploymentGrid
    metadata:
      name: deploymentgrid-demo
      namespace: default
    spec:
      gridUniqKey: location
      template:
        replicas: 2
        selector:
          matchLabels:
            appGrid: echo
        strategy: {}
        template:
          metadata:
            creationTimestamp: null
            labels:
              appGrid: echo
          spec:
            containers:
            - image: superedge/echoserver:2.2
              name: echo
              ports:
              - containerPort: 8080
                protocol: TCP
              env:
              - name: NODE_NAME
                valueFrom:
                  fieldRef:
                    fieldPath: spec.nodeName
              - name: POD_NAME
                valueFrom:
                  fieldRef:
                    fieldPath: metadata.name
              - name: POD_NAMESPACE
                valueFrom:
                  fieldRef:
                    fieldPath: metadata.namespace
              - name: POD_IP
                valueFrom:
                  fieldRef:
                    fieldPath: status.podIP
              resources: {}

Deploy a ServiceGrid

    apiVersion: superedge.io/v1
    kind: ServiceGrid
    metadata:
      name: servicegrid-demo
      namespace: default
    spec:
      gridUniqKey: location
      template:
        selector:
          appGrid: echo
        ports:
        - protocol: TCP
          port: 80
          targetPort: 8080

    [~]# kubectl get dg
    NAME                  AGE
    deploymentgrid-demo   9s
    [~]# kubectl get deployment
    NAME                            READY   UP-TO-DATE   AVAILABLE   AGE
    deploymentgrid-demo-beijing     2/2     2            2           14s
    deploymentgrid-demo-guangzhou   2/2     2            2           14s
    [~]# kubectl get pods -o wide
    NAME                                             READY   STATUS    RESTARTS   AGE     IP           NODE   NOMINATED NODE   READINESS GATES
    deploymentgrid-demo-beijing-65d669b7d-v9zdr      1/1     Running   0          6m51s   10.0.1.72    bj-3   <none>           <none>
    deploymentgrid-demo-beijing-65d669b7d-wrx7r      1/1     Running   0          6m51s   10.0.0.70    bj-1   <none>           <none>
    deploymentgrid-demo-guangzhou-5d599854c8-hhmt2   1/1     Running   0          6m52s   10.0.0.139   gz-2   <none>           <none>
    deploymentgrid-demo-guangzhou-5d599854c8-k9gc7   1/1     Running   0          6m52s   10.0.1.8     gz-3   <none>           <none>
    # As shown above, for one DeploymentGrid a separate Deployment is created in each NodeUnit;
    # the Deployments are distinguished by names of the form <deployment>-<nodeunit>.
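The fan-out seen in the output above (one Deployment per NodeUnit, named `<deployment>-<nodeunit>`) can be sketched as follows. This is an illustration of the naming rule only, not the controller's actual code. The node-to-label assignment (`location=beijing` for `bj-*`, `location=guangzhou` for `gz-*`) is an assumption inferred from the output, and both function names are hypothetical.

```python
from collections import defaultdict


def group_nodes_by_unit(node_labels, grid_uniq_key):
    """Group node names by the value of the gridUniqKey label.

    Nodes that do not carry the label belong to no NodeUnit and are skipped.
    """
    units = defaultdict(list)
    for node, labels in node_labels.items():
        value = labels.get(grid_uniq_key)
        if value is not None:
            units[value].append(node)
    return {unit: sorted(nodes) for unit, nodes in units.items()}


def workload_names(grid_name, units):
    """Return the per-NodeUnit workload names, <grid name>-<nodeunit>."""
    return sorted(f"{grid_name}-{unit}" for unit in units)


# Assumed labels: the source shows only node names and the resulting Deployments.
nodes = {
    "bj-1": {"location": "beijing"},
    "bj-3": {"location": "beijing"},
    "gz-2": {"location": "guangzhou"},
    "gz-3": {"location": "guangzhou"},
}
units = group_nodes_by_unit(nodes, "location")
names = workload_names("deploymentgrid-demo", units)
print(names)
```

With the assumed labels, the two generated names match the Deployments listed by `kubectl get deployment` above.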

    [~]# kubectl get svc
    NAME                   TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
    kubernetes             ClusterIP   172.16.33.1     <none>        443/TCP   47h
    servicegrid-demo-svc   ClusterIP   172.16.33.231   <none>        80/TCP    3s
    [~]# kubectl describe svc servicegrid-demo-svc
    Name:              servicegrid-demo-svc
    Namespace:         default
    Labels:            superedge.io/grid-selector=servicegrid-demo
                       superedge.io/grid-uniq-key=location
    Annotations:       topologyKeys: ["location"]
    Selector:          appGrid=echo
    Type:              ClusterIP
    IP Families:       <none>
    IP:                172.16.33.231
    IPs:               <none>
    Port:              <unset>  80/TCP
    TargetPort:        8080/TCP
    Endpoints:         10.0.0.139:8080,10.0.0.70:8080,10.0.1.72:8080 + 1 more...
    Session Affinity:  None
    Events:            <none>
    # As shown above, for each ServiceGrid a standard Service named <servicename>-svc is created.
    # Note: the backend Endpoints of this Service still list the endpoint addresses of ALL pods;
    # they are not filtered by NodeUnit at this level.
    # Run the following command inside a pod in the guangzhou region
    [~]# curl 172.16.33.231|grep "node name"
    node name: gz-2
    ...
    # This returns gz-2 or gz-3 at random; the request never crosses the NodeUnit to reach bj-1 or bj-3.
    # Run the following command inside a pod in the beijing region
    [~]# curl 172.16.33.231|grep "node name"
    node name: bj-3
    # This returns bj-1 or bj-3 at random; the request never crosses the NodeUnit to reach gz-2 or gz-3.

How it works

    -A KUBE-SERVICES -d 172.16.33.231/32 -p tcp -m comment --comment "default/servicegrid-demo-svc: cluster IP" -m tcp --dport 80 -j KUBE-SVC-MLDT4NC26VJPGLP7
    -A KUBE-SVC-MLDT4NC26VJPGLP7 -m comment --comment "default/servicegrid-demo-svc:" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-VB3QR2E2PUKDLHCW
    -A KUBE-SVC-MLDT4NC26VJPGLP7 -m comment --comment "default/servicegrid-demo-svc:" -j KUBE-SEP-U5ZEIIBVDDGER3DI
    -A KUBE-SEP-U5ZEIIBVDDGER3DI -p tcp -m comment --comment "default/servicegrid-demo-svc:" -m tcp -j DNAT --to-destination 10.0.1.72:8080
    -A KUBE-SEP-VB3QR2E2PUKDLHCW -p tcp -m comment --comment "default/servicegrid-demo-svc:" -m tcp -j DNAT --to-destination 10.0.0.70:8080

    apiVersion: superedge.io/v1
    kind: ServiceGrid
    metadata:
      name: servicegrid-demo
      namespace: default
    spec:
      gridUniqKey: location
      template:
        clusterIP: None
        selector:
          appGrid: echo
        ports:
        - protocol: TCP
          port: 8080
          targetPort: 8080

    Name:              servicegrid-demo-svc
    Namespace:         default
    Labels:            superedge.io/grid-selector=servicegrid-demo
                       superedge.io/grid-uniq-key=location
    Annotations:       topologyKeys: ["location"]
    Selector:          appGrid=echo
    Type:              ClusterIP
    IP Families:       <none>
    IP:                None
    IPs:               <none>
    Port:              <unset>  8080/TCP
    TargetPort:        8080/TCP
    Endpoints:         10.0.0.139:8080,10.0.0.70:8080,10.0.1.72:8080 + 1 more...
    Session Affinity:  None
    Events:            <none>

    [~]# nslookup servicegrid-demo-svc.default.svc.cluster.local
    Server:    169.254.20.11
    Address:   169.254.20.11#53
    Name:      servicegrid-demo-svc.default.svc.cluster.local
    Address:   10.0.1.8
    Name:      servicegrid-demo-svc.default.svc.cluster.local
    Address:   10.0.1.72
    Name:      servicegrid-demo-svc.default.svc.cluster.local
    Address:   10.0.0.70
    Name:      servicegrid-demo-svc.default.svc.cluster.local
    Address:   10.0.0.139

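The analysis above leads to the following conclusion. If access to the Service is forwarded through kube-proxy's iptables rules, and the Service was created from a ServiceGrid and is therefore watched by application-grid-wrapper, traffic stays closed within the region. If the client instead obtains the actual Endpoint IP addresses through DNS (as with a headless Service), traffic closure cannot be achieved, because DNS returns the endpoints of every NodeUnit.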
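The regional traffic closure described above can be pictured as a per-node filter over the Service's endpoints. The sketch below is an assumption-laden illustration, not the actual application-grid-wrapper implementation: it keeps only the endpoints whose node has the same value for the topology key (`location`) as the local node. The pod IP to node mapping comes from the `kubectl get pods -o wide` output earlier in this document; the node labels and the function name `filter_endpoints` are assumed.

```python
def filter_endpoints(endpoints, pod_node, node_labels, topology_key, local_node):
    """Keep endpoints hosted on nodes in the same NodeUnit as local_node.

    endpoints:    list of pod IPs backing the Service
    pod_node:     pod IP -> node name
    node_labels:  node name -> label dict
    topology_key: label key that defines the NodeUnit (the gridUniqKey)
    """
    local_value = node_labels[local_node].get(topology_key)
    return [
        ip
        for ip in endpoints
        if node_labels[pod_node[ip]].get(topology_key) == local_value
    ]


# Pod placement as shown earlier; node labels are an assumption.
pod_node = {
    "10.0.1.72": "bj-3",
    "10.0.0.70": "bj-1",
    "10.0.0.139": "gz-2",
    "10.0.1.8": "gz-3",
}
node_labels = {
    "bj-1": {"location": "beijing"},
    "bj-3": {"location": "beijing"},
    "gz-2": {"location": "guangzhou"},
    "gz-3": {"location": "guangzhou"},
}
all_endpoints = ["10.0.0.139", "10.0.0.70", "10.0.1.72", "10.0.1.8"]

beijing_view = filter_endpoints(all_endpoints, pod_node, node_labels, "location", "bj-1")
guangzhou_view = filter_endpoints(all_endpoints, pod_node, node_labels, "location", "gz-2")
print(beijing_view)
print(guangzhou_view)
```

The beijing view contains the same two addresses that appear as DNAT targets in the iptables rules shown in this section, which is consistent with those rules having been captured on a beijing node. A DNS lookup against the headless Service bypasses this filter, which is why it returns all four addresses.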

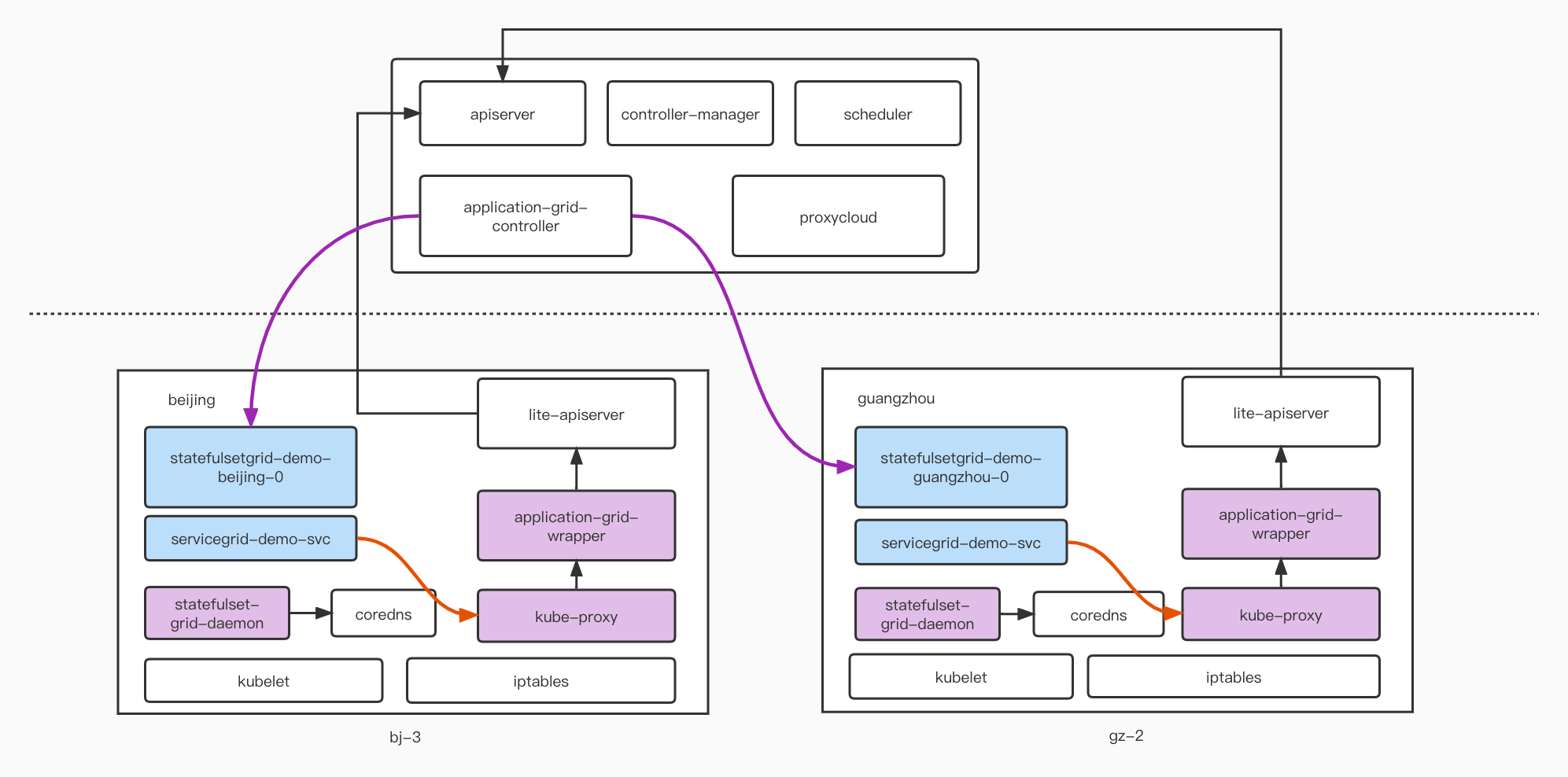

Stateful ServiceGroup

Deploy a StatefulSetGrid

    apiVersion: superedge.io/v1
    kind: StatefulSetGrid
    metadata:
      name: statefulsetgrid-demo
      namespace: default
    spec:
      gridUniqKey: location
      template:
        selector:
          matchLabels:
            appGrid: echo
        serviceName: "servicegrid-demo-svc"
        replicas: 3
        template:
          metadata:
            labels:
              appGrid: echo
          spec:
            terminationGracePeriodSeconds: 10
            containers:
            - image: superedge/echoserver:2.2
              name: echo
              ports:
              - containerPort: 8080
                protocol: TCP
              env:
              - name: NODE_NAME
                valueFrom:
                  fieldRef:
                    fieldPath: spec.nodeName
              - name: POD_NAME
                valueFrom:
                  fieldRef:
                    fieldPath: metadata.name
              - name: POD_NAMESPACE
                valueFrom:
                  fieldRef:
                    fieldPath: metadata.namespace
              - name: POD_IP
                valueFrom:
                  fieldRef:
                    fieldPath: status.podIP
              resources: {}

Deploy a ServiceGrid

    apiVersion: superedge.io/v1
    kind: ServiceGrid
    metadata:
      name: servicegrid-demo
      namespace: default
    spec:
      gridUniqKey: location
      template:
        selector:
          appGrid: echo
        ports:
        - protocol: TCP
          port: 80
          targetPort: 8080

    [~]# kubectl get ssg
    NAME                   AGE
    statefulsetgrid-demo   31s
    [~]# kubectl get statefulset
    NAME                             READY   AGE
    statefulsetgrid-demo-beijing     3/3     49s
    statefulsetgrid-demo-guangzhou   3/3     49s
    [~]# kubectl get pods -o wide
    NAME                               READY   STATUS    RESTARTS   AGE   IP           NODE   NOMINATED NODE   READINESS GATES
    statefulsetgrid-demo-beijing-0     1/1     Running   0          9s    10.0.0.67    bj-1   <none>           <none>
    statefulsetgrid-demo-beijing-1     1/1     Running   0          8s    10.0.1.67    bj-3   <none>           <none>
    statefulsetgrid-demo-beijing-2     1/1     Running   0          6s    10.0.0.69    bj-1   <none>           <none>
    statefulsetgrid-demo-guangzhou-0   1/1     Running   0          9s    10.0.0.136   gz-2   <none>           <none>
    statefulsetgrid-demo-guangzhou-1   1/1     Running   0          8s    10.0.1.7     gz-3   <none>           <none>
    statefulsetgrid-demo-guangzhou-2   1/1     Running   0          6s    10.0.0.138   gz-2   <none>           <none>
    [~]# kubectl get svc
    NAME                   TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
    kubernetes             ClusterIP   172.16.33.1     <none>        443/TCP   2d2h
    servicegrid-demo-svc   ClusterIP   172.16.33.220   <none>        80/TCP    30s
    [~]# kubectl describe svc servicegrid-demo-svc
    Name:              servicegrid-demo-svc
    Namespace:         default
    Labels:            superedge.io/grid-selector=servicegrid-demo
                       superedge.io/grid-uniq-key=location
    Annotations:       topologyKeys: ["location"]
    Selector:          appGrid=echo
    Type:              ClusterIP
    IP Families:       <none>
    IP:                172.16.33.220
    IPs:               <none>
    Port:              <unset>  80/TCP
    TargetPort:        8080/TCP
    Endpoints:         10.0.0.136:8080,10.0.0.138:8080,10.0.0.67:8080 + 3 more...
    Session Affinity:  None
    Events:            <none>
    # From a pod in the guangzhou region, accessing the ClusterIP returns gz-2 or gz-3 at random as the node name;
    # Pods in the beijing region are never reached. Accessing the Service by its domain name behaves the same way.
    [~]# curl 172.16.33.220|grep "node name"
    node name: gz-2
    [~]# curl servicegrid-demo-svc.default.svc.cluster.local|grep "node name"
    node name: gz-3
    # From a pod in the beijing region, accessing the ClusterIP returns bj-1 or bj-3 at random as the node name;
    # Pods in the guangzhou region are never reached. Accessing the Service by its domain name behaves the same way.
    [~]# curl 172.16.33.220|grep "node name"
    node name: bj-1
    [~]# curl servicegrid-demo-svc.default.svc.cluster.local|grep "node name"
    node name: bj-3

    -A KUBE-SERVICES -d 172.16.33.220/32 -p tcp -m comment --comment "default/servicegrid-demo-svc: cluster IP" -m tcp --dport 80 -j KUBE-SVC-MLDT4NC26VJPGLP7
    -A KUBE-SVC-MLDT4NC26VJPGLP7 -m comment --comment "default/servicegrid-demo-svc:" -m statistic --mode random --probability 0.33333333349 -j KUBE-SEP-Z2EAS2K37V5WRDQC
    -A KUBE-SVC-MLDT4NC26VJPGLP7 -m comment --comment "default/servicegrid-demo-svc:" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-PREBTG6M6AFB3QA4
    -A KUBE-SVC-MLDT4NC26VJPGLP7 -m comment --comment "default/servicegrid-demo-svc:" -j KUBE-SEP-URDEBXDF3DV5ITUX
    -A KUBE-SEP-Z2EAS2K37V5WRDQC -p tcp -m comment --comment "default/servicegrid-demo-svc:" -m tcp -j DNAT --to-destination 10.0.0.136:8080
    -A KUBE-SEP-PREBTG6M6AFB3QA4 -p tcp -m comment --comment "default/servicegrid-demo-svc:" -m tcp -j DNAT --to-destination 10.0.0.138:8080
    -A KUBE-SEP-URDEBXDF3DV5ITUX -p tcp -m comment --comment "default/servicegrid-demo-svc:" -m tcp -j DNAT --to-destination 10.0.1.7:8080

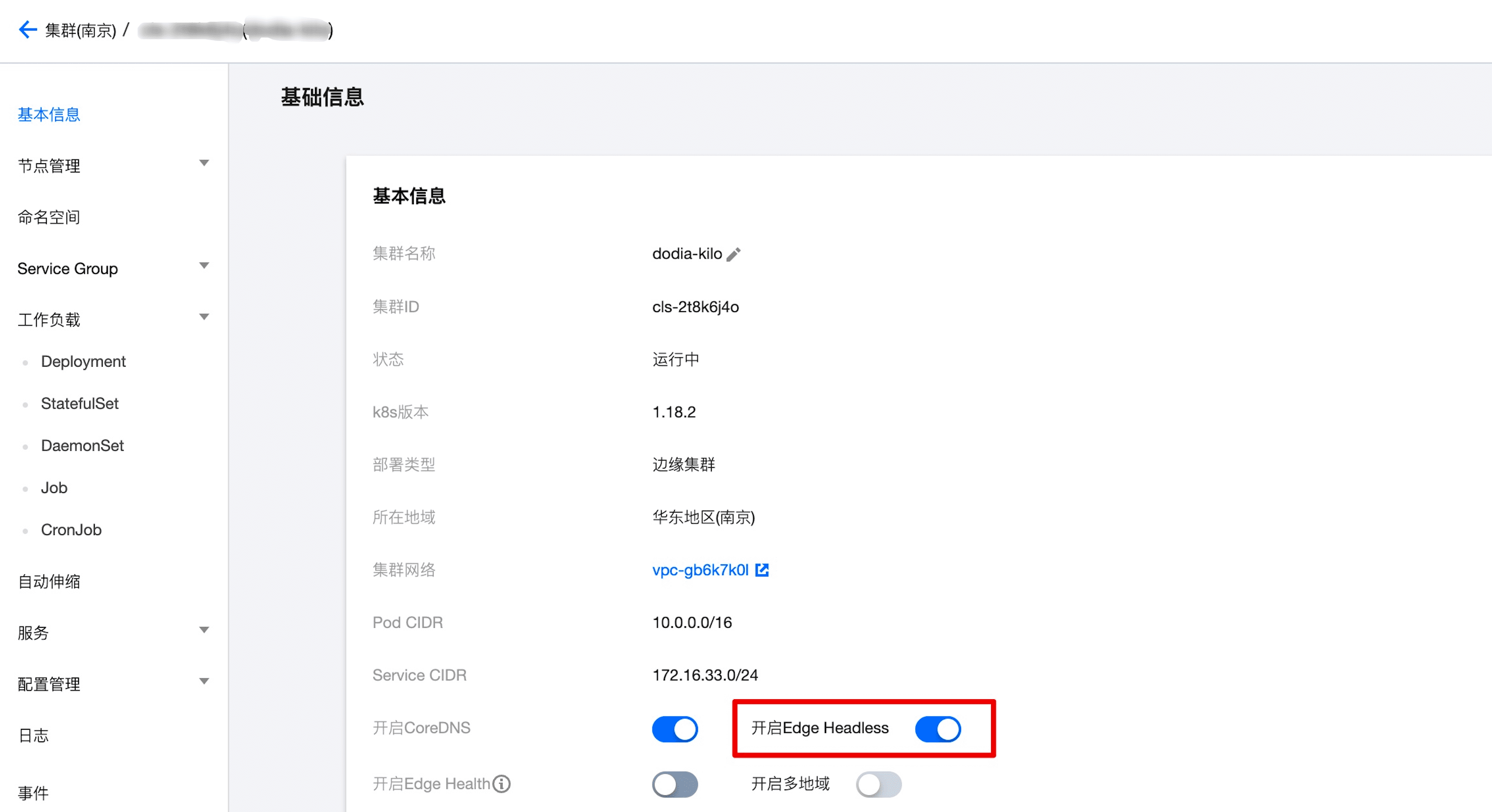
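The `--probability` values in the iptables rules of this section (0.33333333349, then 0.50000000000, then an unconditional jump) follow the pattern kube-proxy uses in iptables mode to spread traffic evenly: rule i of n matches with probability 1/(n-i), and the last rule always matches. The sketch below reproduces that arithmetic and checks that each endpoint ends up with an equal share. The function names are illustrative, and the small deviation in the printed 0.33333333349 comes from iptables' fixed-point representation of the probability.

```python
def rule_probabilities(n):
    """Per-rule match probability for n endpoints, evaluated in order.

    Rule i (0-based) matches with probability 1 / (n - i); the final rule
    therefore has probability 1.0, i.e. it is an unconditional jump.
    """
    if n <= 0:
        raise ValueError("need at least one endpoint")
    return [1.0 / (n - i) for i in range(n)]


def effective_shares(probs):
    """Overall share of traffic each rule receives when rules run in order."""
    shares = []
    remaining = 1.0  # fraction of traffic that has not matched an earlier rule
    for p in probs:
        shares.append(remaining * p)
        remaining *= 1.0 - p
    return shares


probs2 = rule_probabilities(2)  # two local endpoints (DeploymentGrid demo)
probs3 = rule_probabilities(3)  # three local endpoints (StatefulSetGrid demo)
shares3 = effective_shares(probs3)
print(probs2, probs3, shares3)
```

Because only the three endpoints of the local NodeUnit appear in the chain, every one of them receives about one third of the traffic and no rule targets a pod in the other region.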

StatefulSetGrid + Headless Service support

Deploy a Headless Service

    apiVersion: superedge.io/v1
    kind: ServiceGrid
    metadata:
      name: servicegrid-demo
      namespace: default
    spec:
      gridUniqKey: location
      template:
        clusterIP: None
        selector:
          appGrid: echo
        ports:
        - protocol: TCP
          port: 8080
          targetPort: 8080

    [~]# kubectl describe svc servicegrid-demo-svc
    Name:              servicegrid-demo-svc
    Namespace:         default
    Labels:            superedge.io/grid-selector=servicegrid-demo
                       superedge.io/grid-uniq-key=location
    Annotations:       topologyKeys: ["location"]
    Selector:          appGrid=echo
    Type:              ClusterIP
    IP Families:       <none>
    IP:                None
    IPs:               <none>
    Port:              <unset>  8080/TCP
    TargetPort:        8080/TCP
    Endpoints:         10.0.0.136:8080,10.0.0.138:8080,10.0.0.67:8080 + 3 more...
    Session Affinity:  None
    Events:            <none>

    [~]# nslookup servicegrid-demo-svc.default.svc.cluster.local
    Server:    169.254.20.11
    Address:   169.254.20.11#53
    Name:      servicegrid-demo-svc.default.svc.cluster.local
    Address:   10.0.1.7
    Name:      servicegrid-demo-svc.default.svc.cluster.local
    Address:   10.0.0.136
    Name:      servicegrid-demo-svc.default.svc.cluster.local
    Address:   10.0.0.138
    Name:      servicegrid-demo-svc.default.svc.cluster.local
    Address:   10.0.0.69
    Name:      servicegrid-demo-svc.default.svc.cluster.local
    Address:   10.0.1.67
    Name:      servicegrid-demo-svc.default.svc.cluster.local
    Address:   10.0.0.67

How can the standard StatefulSet access pattern be supported within a NodeUnit?

    [~]# nslookup statefulsetgrid-demo-beijing-0.servicegrid-demo-svc.default.svc.cluster.local
    Server:    169.254.20.11
    Address:   169.254.20.11#53
    Name:      statefulsetgrid-demo-beijing-0.servicegrid-demo-svc.default.svc.cluster.local
    Address:   10.0.0.67

    edge-system   statefulset-grid-daemon-8gtrz   1/1   Running   0   7h42m   172.16.35.193   gz-3   <none>   <none>
    edge-system   statefulset-grid-daemon-8xvrg   1/1   Running   0   7h42m   172.16.32.211   gz-2   <none>   <none>
    edge-system   statefulset-grid-daemon-ctr6w   1/1   Running   0   7h42m   192.168.10.15   bj-3   <none>   <none>
    edge-system   statefulset-grid-daemon-jnvxz   1/1   Running   0   7h42m   192.168.10.12   bj-1   <none>   <none>
    edge-system   statefulset-grid-daemon-v9llj   1/1   Running   0   7h42m   172.16.34.168   gz-1   <none>   <none>
    edge-system   statefulset-grid-daemon-w7lpt   1/1   Running   0   7h42m   192.168.10.7    bj-2   <none>   <none>

{StatefulSet}-{0..N-1}.SVC.default.svc.cluster.local

{StatefulSet}-{NodeUnit}-{0..N-1}.SVC.default.svc.cluster.local

    # Run nslookup in the guangzhou region
    [~]# nslookup statefulsetgrid-demo-0.servicegrid-demo-svc.default.svc.cluster.local
    Server:    169.254.20.11
    Address:   169.254.20.11#53
    Name:      statefulsetgrid-demo-0.servicegrid-demo-svc.default.svc.cluster.local
    Address:   10.0.0.136
    [~]# nslookup statefulsetgrid-demo-guangzhou-0.servicegrid-demo-svc.default.svc.cluster.local
    Server:    169.254.20.11
    Address:   169.254.20.11#53
    Name:      statefulsetgrid-demo-guangzhou-0.servicegrid-demo-svc.default.svc.cluster.local
    Address:   10.0.0.136
    # Run nslookup in the beijing region
    [~]# nslookup statefulsetgrid-demo-0.servicegrid-demo-svc.default.svc.cluster.local
    Server:    169.254.20.11
    Address:   169.254.20.11#53
    Name:      statefulsetgrid-demo-0.servicegrid-demo-svc.default.svc.cluster.local
    Address:   10.0.0.67
    [~]# nslookup statefulsetgrid-demo-beijing-0.servicegrid-demo-svc.default.svc.cluster.local
    Server:    169.254.20.11
    Address:   169.254.20.11#53
    Name:      statefulsetgrid-demo-beijing-0.servicegrid-demo-svc.default.svc.cluster.local
    Address:   10.0.0.67

    # Run the following commands in the guangzhou region
    [~]# curl statefulsetgrid-demo-0.servicegrid-demo-svc.default.svc.cluster.local:8080 | grep "pod name:"
    pod name: statefulsetgrid-demo-guangzhou-0
    [~]# curl statefulsetgrid-demo-1.servicegrid-demo-svc.default.svc.cluster.local:8080 | grep "pod name:"
    pod name: statefulsetgrid-demo-guangzhou-1
    [~]# curl statefulsetgrid-demo-2.servicegrid-demo-svc.default.svc.cluster.local:8080 | grep "pod name:"
    pod name: statefulsetgrid-demo-guangzhou-2
    # Run the following commands in the beijing region
    [~]# curl statefulsetgrid-demo-0.servicegrid-demo-svc.default.svc.cluster.local:8080 | grep "pod name:"
    pod name: statefulsetgrid-demo-beijing-0
    [~]# curl statefulsetgrid-demo-1.servicegrid-demo-svc.default.svc.cluster.local:8080 | grep "pod name:"
    pod name: statefulsetgrid-demo-beijing-1
    [~]# curl statefulsetgrid-demo-2.servicegrid-demo-svc.default.svc.cluster.local:8080 | grep "pod name:"
    pod name: statefulsetgrid-demo-beijing-2
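The lookups above show that, inside a NodeUnit, the shorthand name `{StatefulSet}-{ordinal}` resolves to the same pod as the full name `{StatefulSet}-{NodeUnit}-{ordinal}`. The sketch below illustrates only that name equivalence. It does not describe how statefulset-grid-daemon implements the resolution, and the function name `nodeunit_fqdn` is hypothetical.

```python
def nodeunit_fqdn(short_fqdn, statefulset_grid, node_unit):
    """Expand '<grid>-<ordinal>.<rest>' to '<grid>-<nodeunit>-<ordinal>.<rest>'.

    Raises ValueError if the first DNS label is not '<grid>-<ordinal>'.
    """
    host, _, rest = short_fqdn.partition(".")
    prefix = statefulset_grid + "-"
    if not host.startswith(prefix):
        raise ValueError(f"{host!r} does not belong to {statefulset_grid!r}")
    ordinal = host[len(prefix):]
    if not ordinal.isdigit():
        raise ValueError(f"{host!r} does not end in a pod ordinal")
    return f"{statefulset_grid}-{node_unit}-{ordinal}.{rest}"


short = "statefulsetgrid-demo-0.servicegrid-demo-svc.default.svc.cluster.local"
in_guangzhou = nodeunit_fqdn(short, "statefulsetgrid-demo", "guangzhou")
in_beijing = nodeunit_fqdn(short, "statefulsetgrid-demo", "beijing")
print(in_guangzhou)
print(in_beijing)
```

The same shorthand expands to a different full name depending on the NodeUnit of the caller, which matches the nslookup results: 10.0.0.136 in guangzhou and 10.0.0.67 in beijing.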

Implementation principle

Canary release by NodeUnit

Key fields

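| Field | Description |
| --- | --- |
| templatePool | The set of templates available for canary release |
| templates | Mapping from each NodeUnit to the template in templatePool that it uses. A NodeUnit without an entry uses the template named by defaultTemplateName |
| defaultTemplateName | The template used by default. If omitted or set to "default", spec.template is used |
| autoDeleteUnusedTemplate | Defaults to false. If set to true, templates in templatePool that are referenced neither in templates nor in spec.template are deleted automatically |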
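The lookup order described by these fields can be sketched as a small resolver: an explicit entry in `templates` wins, then `defaultTemplateName`, and the name "default" (or no name at all) falls back to `spec.template`. This is a reading of the field descriptions above, not SuperEdge's controller code. The function name `resolve_template` is hypothetical, and the spec dictionary is trimmed to `replicas` for brevity.

```python
def resolve_template(spec, node_unit):
    """Return (template name, template body) that a NodeUnit would use.

    Order, per the field descriptions: templates[node_unit], then
    defaultTemplateName, then spec.template when the name is missing
    or equal to "default".
    """
    name = spec.get("templates", {}).get(node_unit)
    if not name:
        name = spec.get("defaultTemplateName") or "default"
    if name == "default":
        return name, spec["template"]
    return name, spec["templatePool"][name]


# Trimmed-down spec: only replicas are kept from each template.
spec = {
    "defaultTemplateName": "test1",
    "gridUniqKey": "zone",
    "template": {"replicas": 1},
    "templatePool": {"test1": {"replicas": 2}, "test2": {"replicas": 3}},
    "templates": {"zone1": "test1", "zone2": "test2"},
}

for unit in ("zone1", "zone2", "zone3"):
    print(unit, resolve_template(spec, unit))
```

Here `zone3` has no entry in `templates`, so it falls back to `defaultTemplateName` (`test1`). If `defaultTemplateName` were removed or set to "default", it would use `spec.template` instead.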

Create workloads from the same template

Create workloads from different templates

    apiVersion: superedge.io/v1
    kind: DeploymentGrid
    metadata:
      name: deploymentgrid-demo
      namespace: default
    spec:
      defaultTemplateName: test1
      gridUniqKey: zone
      template:
        replicas: 1
        selector:
          matchLabels:
            appGrid: echo
        strategy: {}
        template:
          metadata:
            creationTimestamp: null
            labels:
              appGrid: echo
          spec:
            containers:
            - image: superedge/echoserver:2.2
              name: echo
              ports:
              - containerPort: 8080
                protocol: TCP
              env:
              - name: NODE_NAME
                valueFrom:
                  fieldRef:
                    fieldPath: spec.nodeName
              - name: POD_NAME
                valueFrom:
                  fieldRef:
                    fieldPath: metadata.name
              - name: POD_NAMESPACE
                valueFrom:
                  fieldRef:
                    fieldPath: metadata.namespace
              - name: POD_IP
                valueFrom:
                  fieldRef:
                    fieldPath: status.podIP
              resources: {}
      templatePool:
        test1:
          replicas: 2
          selector:
            matchLabels:
              appGrid: echo
          strategy: {}
          template:
            metadata:
              creationTimestamp: null
              labels:
                appGrid: echo
            spec:
              containers:
              - image: superedge/echoserver:2.2
                name: echo
                ports:
                - containerPort: 8080
                  protocol: TCP
                env:
                - name: NODE_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: spec.nodeName
                - name: POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
                - name: POD_IP
                  valueFrom:
                    fieldRef:
                      fieldPath: status.podIP
                resources: {}
        test2:
          replicas: 3
          selector:
            matchLabels:
              appGrid: echo
          strategy: {}
          template:
            metadata:
              creationTimestamp: null
              labels:
                appGrid: echo
            spec:
              containers:
              - image: superedge/echoserver:2.3
                name: echo
                ports:
                - containerPort: 8080
                  protocol: TCP
                env:
                - name: NODE_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: spec.nodeName
                - name: POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
                - name: POD_IP
                  valueFrom:
                    fieldRef:
                      fieldPath: status.podIP
                resources: {}
      templates:
        zone1: test1
        zone2: test2

Multi-cluster distribution

Features

Prerequisites

Key fields

Usage example

    apiVersion: superedge.io/v1
    kind: DeploymentGrid
    metadata:
      name: deploymentgrid-demo
      namespace: default
      labels:
        superedge.io/fed: "yes"
    spec:
      defaultTemplateName: test1
      gridUniqKey: zone
      template:
        replicas: 1
        selector:
          matchLabels:
            appGrid: echo
        strategy: {}
        template:
          metadata:
            creationTimestamp: null
            labels:
              appGrid: echo
          spec:
            containers:
            - image: superedge/echoserver:2.2
              name: echo
              ports:
              - containerPort: 8080
                protocol: TCP
              env:
              - name: NODE_NAME
                valueFrom:
                  fieldRef:
                    fieldPath: spec.nodeName
              - name: POD_NAME
                valueFrom:
                  fieldRef:
                    fieldPath: metadata.name
              - name: POD_NAMESPACE
                valueFrom:
                  fieldRef:
                    fieldPath: metadata.namespace
              - name: POD_IP
                valueFrom:
                  fieldRef:
                    fieldPath: status.podIP
              resources: {}
      templatePool:
        test1:
          replicas: 2
          selector:
            matchLabels:
              appGrid: echo
          strategy: {}
          template:
            metadata:
              creationTimestamp: null
              labels:
                appGrid: echo
            spec:
              containers:
              - image: superedge/echoserver:2.2
                name: echo
                ports:
                - containerPort: 8080
                  protocol: TCP
                env:
                - name: NODE_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: spec.nodeName
                - name: POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
                - name: POD_IP
                  valueFrom:
                    fieldRef:
                      fieldPath: status.podIP
                resources: {}
        test2:
          replicas: 3
          selector:
            matchLabels:
              appGrid: echo
          strategy: {}
          template:
            metadata:
              creationTimestamp: null
              labels:
                appGrid: echo
            spec:
              containers:
              - image: superedge/echoserver:2.2
                name: echo
                ports:
                - containerPort: 8080
                  protocol: TCP
                env:
                - name: NODE_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: spec.nodeName
                - name: POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
                - name: POD_IP
                  valueFrom:
                    fieldRef:
                      fieldPath: status.podIP
                resources: {}
      templates:
        zone1: test1
        zone2: test2

    [root@VM-0-174-centos ~]# kubectl get deploy
    NAME                        READY   UP-TO-DATE   AVAILABLE   AGE
    deploymentgrid-demo-zone1   2/2     2            2           99s

    [root@VM-0-42-centos ~]# kubectl get deploy
    NAME                        READY   UP-TO-DATE   AVAILABLE   AGE
    deploymentgrid-demo-zone2   3/3     3            3           6s

    status:
      states:
        zone1:
          conditions:
          - lastTransitionTime: "2021-06-17T07:33:50Z"
            lastUpdateTime: "2021-06-17T07:33:50Z"
            message: Deployment has minimum availability.
            reason: MinimumReplicasAvailable
            status: "True"
            type: Available
          readyReplicas: 2
          replicas: 2
        zone2:
          conditions:
          - lastTransitionTime: "2021-06-17T07:37:12Z"
            lastUpdateTime: "2021-06-17T07:37:12Z"
            message: Deployment has minimum availability.
            reason: MinimumReplicasAvailable
            status: "True"
            type: Available
          readyReplicas: 3
          replicas: 3
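The aggregated `status.states` block above reports one entry per NodeUnit. A minimal sketch of how a script might consume it is shown below, assuming the status has already been loaded into a Python dict (for example from `kubectl get ... -o json`). The helper name `not_ready_units` is hypothetical, and only the `readyReplicas` and `replicas` fields shown above are used.

```python
def not_ready_units(states):
    """Return the NodeUnits whose readyReplicas differ from replicas."""
    return sorted(
        unit
        for unit, state in states.items()
        if state.get("readyReplicas", 0) != state.get("replicas", 0)
    )


# Values copied from the status shown above (conditions omitted).
states = {
    "zone1": {"readyReplicas": 2, "replicas": 2},
    "zone2": {"readyReplicas": 3, "replicas": 3},
}
pending = not_ready_units(states)
print(pending)
```

An empty result means every NodeUnit across the member clusters has reached its desired replica count.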